Why We Pick Sides Over Nothing: Instant Tribalism Science

TL;DR: Humans willingly pay costs to punish free-riders who exploit group cooperation, a behavior that seems irrational but actually sustains large-scale societies through reputation effects and network enforcement mechanisms.

You're splitting the bill at a restaurant when someone who ordered the most expensive dish suddenly "forgets" their wallet. Everyone else chips in extra, but you're fuming. Here's the weird part: if given the chance, you'd probably pay actual money just to call them out or exclude them next time, even though it costs you more. That's costly punishment, and it's one of humanity's most puzzling behaviors. Why would anyone sacrifice their own resources to punish someone who wronged the group? The answer reveals something profound about how human cooperation evolved and why our societies don't collapse into chaos.

Every cooperative venture faces the same brutal math problem. Whether it's a community garden, open-source software, or national defense, the setup is identical: everyone benefits if people contribute, but each individual does better by letting others do the work. This is the free-rider problem, and it's everywhere.

In economics, a public good is something everyone can use regardless of whether they paid for it. Clean air, lighthouse beams, Wikipedia - you can't exclude non-contributors. The rational move? Don't contribute. Enjoy the benefits while everyone else foots the bill.

This logic should doom cooperation entirely, and in the lab it nearly does. Public goods experiments show that when players follow their self-interest, contributions collapse: players start out cooperating, watch others defect, then defect themselves. Within about ten rounds, cooperation flatlines near zero.

Yet human societies don't collapse. We build roads, fund research, create shared resources. Something breaks the free-rider logic, and that something is punishment.

Standard enforcement makes sense. Governments fine litterers, bosses fire slackers, bouncers eject troublemakers. The punisher has authority and gets paid to enforce rules. That's not what we're talking about.

Costly punishment means you personally sacrifice resources to sanction someone, even when you gain nothing from doing so. You're not the boss. You don't get your money back. You just make the free-rider suffer, and it costs you too.

This shows up constantly in laboratory games. Give people the option to spend their own money to reduce a free-rider's payoff, and they do it. Enthusiastically. Even when it's anonymous. Even when they'll never see that person again.

Researchers call this "altruistic punishment" because it appears to help the group at the punisher's expense. The term is misleading though - people aren't punishing out of pure altruism. They're angry. They want justice. They'll pay for it.

From a Darwinian perspective, this is bonkers. Natural selection should eliminate costly punishers immediately. They spend resources without gaining anything, putting them at a disadvantage compared to free-riders who contribute nothing or cooperators who contribute without wasting money on revenge.

Yet costly punishment shows up wherever researchers look for it. Indigenous societies, industrial nations, collectivist cultures, individualist ones - people everywhere do it to some degree. The behavior appears to be near-universal, which suggests it's not just cultural noise but something deeper.

One influential explanation for this puzzle combines group selection with reputation dynamics. Yes, punishers pay a cost. But groups with punishers thrive. When free-riders know they'll face sanctions, they contribute more. Cooperation stabilizes. The group out-competes groups without enforcement.

Over thousands of generations, genes (and cultural norms) that promote punishment spread because the groups carrying them prosper. Punishers might lose in a single interaction, but they win in the long game because they're part of successful communities.

The clearest evidence comes from public goods games, the experimental workhorse of cooperation research. Here's how they work: four players each receive tokens. They simultaneously decide how many to contribute to a group pot. The pot gets multiplied (say, by 1.6) and split equally among all players, regardless of contribution.

The selfish move is contributing zero. You get a share of others' contributions without paying anything. If everyone reasons this way, the pot stays empty and everyone earns only their initial endowment. If everyone contributes everything, the pot multiplies and everyone profits.
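As a rough illustration of the payoff rule just described, the sketch below computes one round's earnings. The 20-token endowment and the function name `public_goods_payoffs` are assumptions made for the example; the text only specifies four players and a multiplier of 1.6.

```python
def public_goods_payoffs(contributions, endowment=20, multiplier=1.6):
    """Return each player's earnings for one round of a public goods game.

    endowment=20 is an assumed value; the multiplier of 1.6 comes from the text.
    """
    n = len(contributions)
    pot = multiplier * sum(contributions)
    share = pot / n  # split equally, regardless of who contributed
    return [endowment - c + share for c in contributions]


# Each token you contribute returns only multiplier / n = 0.4 tokens to you,
# which is why contributing zero is the selfish best response.
all_defect = public_goods_payoffs([0, 0, 0, 0])
all_cooperate = public_goods_payoffs([20, 20, 20, 20])
one_free_rider = public_goods_payoffs([0, 20, 20, 20])

print("Everyone defects:   ", all_defect)
print("Everyone cooperates:", all_cooperate)
print("One free-rider:     ", one_free_rider)
```

Under these assumed numbers, the lone free-rider earns more than anyone in the fully cooperative group, while the three contributors earn less than they would have if everyone had contributed. That gap is the temptation the rest of the article is about.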

Standard results without punishment: contributions start at around 50% of the endowment, then decay to nearly zero over about 10 rounds as players realize others are defecting.
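One way to picture that decay is a toy model in which players each round give only a fraction of what they saw others give the round before. This is a sketch under stated assumptions, not a fit to any experiment: the `matching` factor of 0.7, the 20-token endowment, and the function name `simulate_decay` are all hypothetical.

```python
def simulate_decay(rounds=10, endowment=20, start_share=0.5, matching=0.7):
    """Average contribution per round in a toy conditional-cooperation model.

    Assumption: each round, players contribute only `matching` times the
    average they observed in the previous round.
    """
    average = start_share * endowment
    history = []
    for _ in range(rounds):
        history.append(average)
        average = matching * average  # players undercut what they saw
    return history


history = simulate_decay()
for round_number, average in enumerate(history, start=1):
    print(f"Round {round_number:2d}: average contribution {average:5.2f} of 20")
```

Even a modest tendency to give slightly less than everyone else is enough, in this toy setup, to push contributions from half the endowment to under one token by the tenth round.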

Now add a punishment stage. After seeing what others contributed, players can spend tokens to reduce another player's earnings. Typically, spending one token reduces the target's earnings by three.
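A minimal sketch of the punishment stage, assuming the one-to-three ratio mentioned above. The starting earnings (44 tokens for a free-rider, 24 for each contributor) are illustrative values from a hypothetical four-player round with a 20-token endowment, and the function name `apply_punishment` is invented for the example.

```python
def apply_punishment(earnings, points, cost_per_point=1, impact_per_point=3):
    """Apply a punishment stage to first-stage earnings.

    points[i][j] is the number of punishment points player i assigns to
    player j. Each point costs the punisher 1 token and removes 3 tokens
    from the target (the ratio given in the text).
    """
    final = list(earnings)
    for i, row in enumerate(points):
        for j, assigned in enumerate(row):
            if i == j:
                continue  # players cannot punish themselves
            final[i] -= cost_per_point * assigned
            final[j] -= impact_per_point * assigned
    return final


# Hypothetical first-stage earnings: player 0 free-rode, the others contributed.
earnings = [44, 24, 24, 24]

# Each contributor spends 3 tokens punishing player 0.
points = [
    [0, 0, 0, 0],
    [3, 0, 0, 0],
    [3, 0, 0, 0],
    [3, 0, 0, 0],
]

after_punishment = apply_punishment(earnings, points)
print(after_punishment)  # [17, 21, 21, 21]
```

In this example the free-rider ends up below the contributors, but the contributors are also poorer than before they punished. Both halves of the term "costly punishment" show up in the arithmetic.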

Results flip dramatically. Contributions jump to 80-90% and stay high. Free-riders get hammered with sanctions, learn their lesson, and start contributing. The threat alone often suffices - once people know punishment is possible, they cooperate preemptively.

Recent experiments reveal that punishment's effectiveness depends on network structure, not just its availability. It's not merely whether you can punish, but who can punish whom.

Studies with incomplete punishment networks show striking patterns. When everyone could punish everyone (a complete network), contributions averaged 26 tokens out of 30. In pairwise networks, where players could only punish adjacent players, contributions dropped to 14 tokens.

The difference isn't punishment capacity - it's connectivity. Higher network density means more potential punishers observing each player. Free-riders can't hide. They face multiple enforcers, raising the expected cost of defection.
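The connectivity argument can be sketched by counting how many players are able to punish each person and scaling a simple expected sanction by that count. Everything numeric here is an assumption for illustration: the four-player layouts, the 50% chance that any given observer punishes, the two points spent per punisher, and the helper names `potential_punishers` and `expected_sanction`.

```python
def potential_punishers(network):
    """Count how many players can punish each player.

    `network` maps each player to the set of players they are able to punish.
    """
    counts = {player: 0 for player in network}
    for punisher, targets in network.items():
        for target in targets:
            counts[target] += 1
    return counts


def expected_sanction(n_punishers, p_punish=0.5, points=2, impact_per_point=3):
    """Expected tokens lost by a free-rider (all parameters are assumed)."""
    return n_punishers * p_punish * points * impact_per_point


# Complete network: everyone can punish everyone else.
complete = {p: {q for q in range(4) if q != p} for p in range(4)}

# Pairwise network: players 0 and 1 watch each other, as do players 2 and 3.
pairwise = {0: {1}, 1: {0}, 2: {3}, 3: {2}}

for name, network in [("complete", complete), ("pairwise", pairwise)]:
    watchers = potential_punishers(network)[0]
    print(name, "network:", watchers, "potential punishers,",
          "expected sanction", expected_sanction(watchers))
```

With identical punishment technology, the free-rider in the complete network faces three times the expected sanction simply because three people are watching instead of one.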

Interestingly, groups with punishment don't always earn more than groups without it. Once you subtract the costs of sanctioning, earnings often equalize. Punishment sustains cooperation but wastes resources in the process. Society needs cooperation, but enforcement is expensive.

Punishment becomes dramatically more powerful when players carry reputations. Research on spatial public goods games demonstrates this beautifully.

In anonymous one-shot games, punishment helps but remains costly. Add reputation - let players know who punishes defectors - and cooperation skyrockets while actual punishment plummets. Why? Because the threat becomes credible and visible.

If you're known as someone who enforces norms, free-riders avoid you. They cooperate preemptively, so you rarely need to follow through. Reputation converts costly punishment into a cheap deterrent. You paid the cost once to build your reputation; now it works for you automatically.

This explains why gossip and social networks matter so much in human societies. Reputation systems allow enforcement to scale. You don't need to personally punish every defector if word gets around that defectors face consequences.

These findings aren't just academic curiosities. They shape how we design institutions, policies, and organizations.

Common-Pool Resources: Elinor Ostrom's Nobel Prize-winning work on common-pool resource management showed that communities sustainably manage fisheries, forests, and irrigation systems through peer monitoring and graduated sanctions. Users punish rule-breakers themselves rather than relying on distant authorities. The cost falls on the community, but so do the benefits.

Online Communities: Wikipedia, Reddit, and open-source projects rely on volunteer moderators who spend time (a cost) enforcing community norms. These platforms create reputation systems (karma, edit counts, badges) that make enforcement visible and reward prosocial behavior, amplifying the deterrent effect.

Workplace Dynamics: Team-based organizations struggle with free-riding until they implement peer feedback mechanisms. When team members can evaluate each other and those evaluations affect bonuses or assignments, contributions equalize. People will sacrifice time to give honest negative feedback if it protects the team's productivity.

Tax Compliance: Governments employ audits (costly enforcement) to deter tax evasion. But research shows that perceived audit probability matters more than actual auditing rates. Publicizing high-profile prosecutions creates a reputation for enforcement that deters widespread evasion without punishing everyone.

Climate Agreements: International cooperation on emissions faces a massive free-rider problem. Countries benefit from others' emission reductions without cutting their own. Current mechanisms rely on naming-and-shaming (reputational punishment) and conditional commitments (only participate if others do), mirroring the laboratory findings on reputation and network structure.

Costly punishment isn't always beneficial. It can reinforce harmful norms, punish whistleblowers, or enforce conformity at the expense of innovation.

Anti-Social Punishment: In some cultures, high contributors get punished for making others look bad. Experiments in societies with weak rule of law show that free-riders punish cooperators to drag them down to the group's lower standard. This "anti-social punishment" undermines cooperation entirely.

Collective Punishment: Punishing entire groups for individual violations breeds resentment and damages cooperation. Schools that punish whole classes, military units that sanction everyone for one soldier's error, or communities that ostracize families for one member's crime create injustice that outweighs any deterrent benefit.

Excessive Costs: Punishment spirals happen when people retaliate against punishers, who then counter-punish, escalating into feuds that destroy group welfare. Without institutions that limit and legitimize punishment, enforcement becomes vigilante justice.

Misaligned Incentives: If punishers can't distinguish genuine free-riders from people facing genuine constraints (illness, bad luck), punishment becomes arbitrary. This kills cooperation because contributing doesn't guarantee you'll avoid sanctions.

Understanding costly punishment lets us design better cooperation mechanisms. Several principles emerge from the research:

Make punishment credible but cheap: Reputation systems and transparency reduce the need for actual sanctioning. Public leaderboards, verified reviews, and audit trails create accountability without constant enforcement.

Match network structure to goals: Dense networks (everyone monitors everyone) work for small groups. Large organizations need hierarchical or regional structures where local monitoring combines with occasional central oversight, mimicking the incomplete punishment networks that still maintain cooperation.

Reward enforcers: Since punishment is costly, institutions that reward norm enforcement reduce the burden on individuals. Moderator status, public recognition, or material compensation makes enforcement sustainable.

Establish clear norms: Punishment only sustains cooperation when people agree on what behaviors deserve sanctions. Ambiguous norms lead to arbitrary punishment that breeds resentment. Transparent rules and community-defined standards prevent enforcement from becoming oppression.

Allow graduated responses: All-or-nothing punishment (total exclusion) is often excessive. Systems that allow warnings, temporary sanctions, and escalation let minor infractions get corrected without destroying relationships.

We are a cooperative species, sustained by a willingness to pay personal costs to punish those who violate group norms. This capacity allowed humans to form large-scale societies long before governments could enforce laws.

Your anger at the restaurant free-rider isn't irrational - it's the emotional machinery that makes cooperation possible. Those feelings, and the actions they motivate, built civilizations.

But that machinery evolved in small groups where everyone knew everyone. Scaling it to millions of strangers requires institutional innovation: legal systems that monopolize legitimate punishment, reputation platforms that make behavior visible across distances, and democratic processes that ensure enforcement serves genuine collective interests rather than tribal prejudices.

The science of costly punishment reveals a profound truth: cooperation isn't natural. It's maintained by people willing to sacrifice for collective benefit, and enforced by people willing to sacrifice again to punish those who won't. Every functioning society rests on that double sacrifice.

Next time you're tempted to let a free-rider slide, remember: your willingness to bear the cost of calling them out isn't petty. It's the foundation of everything we've built together. Without costly punishment, we might still be living in small bands, trusting only close kin. The price of civilization is eternal vigilance, and sometimes, we have to pay it ourselves.

The future of cooperation depends on understanding these mechanisms better. As we face global challenges - pandemics, climate change, AI governance - that require unprecedented coordination among strangers, we'll need institutions that harness costly punishment's benefits while minimizing its costs.

Research continues into how artificial intelligence can identify free-riding patterns at scales impossible for humans, how blockchain technology can create transparent reputation systems, and how mechanism design can align individual incentives with collective welfare.

But the core insight remains: humans cooperate because we're willing to punish, even when it hurts. That willingness, shaped by millions of years of evolution and thousands of years of cultural development, is both our burden and our superpower. Understanding it better means wielding it more wisely.

Ahuna Mons on dwarf planet Ceres is the solar system's only confirmed cryovolcano in the asteroid belt - a mountain made of ice and salt that erupted relatively recently. The discovery reveals that small worlds can retain subsurface oceans and geological activity far longer than expected, expanding the range of potentially habitable environments in our solar system.

Scientists discovered 24-hour protein rhythms in cells without DNA, revealing an ancient timekeeping mechanism that predates gene-based clocks by billions of years and exists across all life.

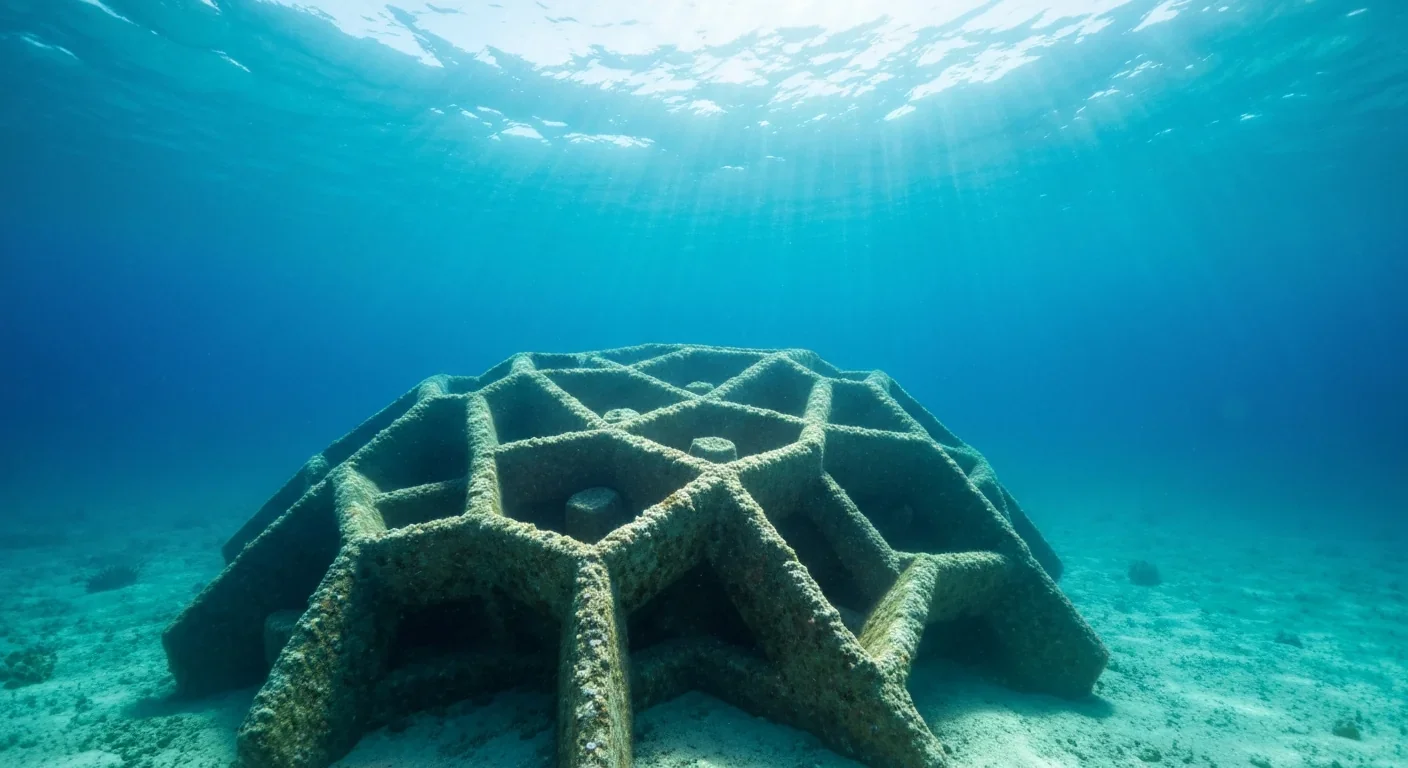

3D-printed coral reefs are being engineered with precise surface textures, material chemistry, and geometric complexity to optimize coral larvae settlement. While early projects show promise - with some designs achieving 80x higher settlement rates - scalability, cost, and the overriding challenge of climate change remain critical obstacles.

The minimal group paradigm shows humans discriminate based on meaningless group labels - like coin flips or shirt colors - revealing that tribalism is hardwired into our brains. Understanding this automatic bias is the first step toward managing it.

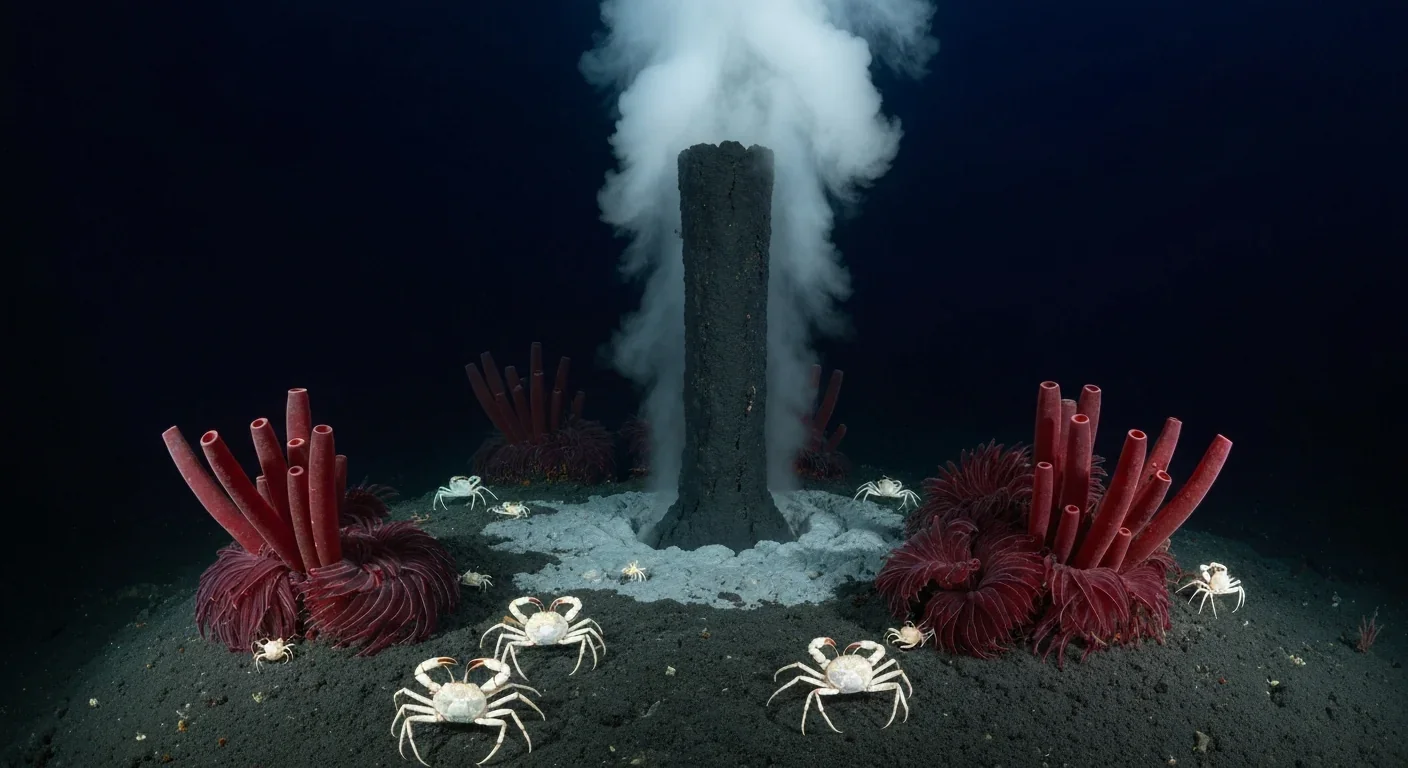

In 1977, scientists discovered thriving ecosystems around underwater volcanic vents powered by chemistry, not sunlight. These alien worlds host bizarre creatures and heat-loving microbes, revolutionizing our understanding of where life can exist on Earth and beyond.

Automated systems in housing - mortgage lending, tenant screening, appraisals, and insurance - systematically discriminate against communities of color by using proxy variables like ZIP codes and credit scores that encode historical racism. While the Fair Housing Act outlawed explicit redlining decades ago, machine learning models trained on biased data reproduce the same patterns at scale. Solutions exist - algorithmic auditing, fairness-aware design, regulatory reform - but require prioritizing equ...

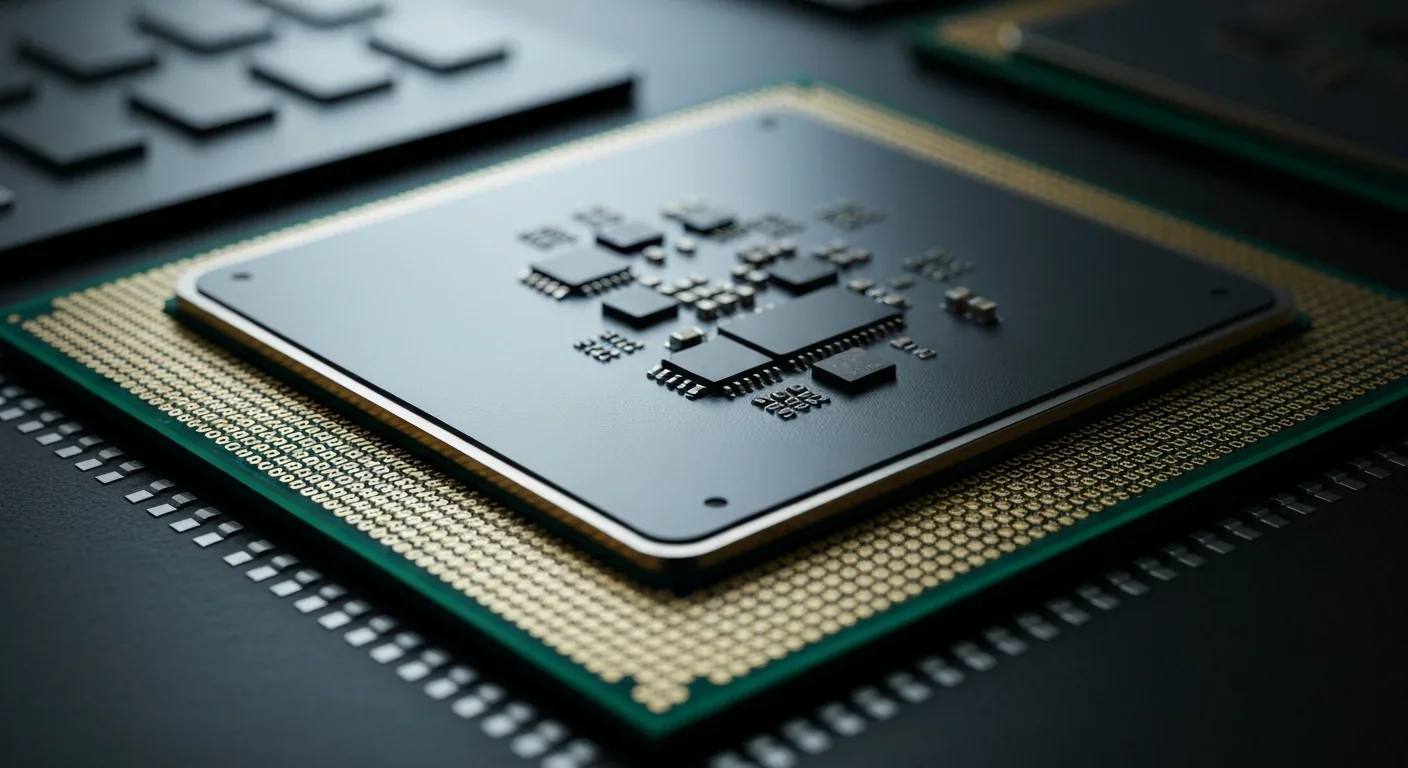

Cache coherence protocols like MESI and MOESI coordinate billions of operations per second to ensure data consistency across multi-core processors. Understanding these invisible hardware mechanisms helps developers write faster parallel code and avoid performance pitfalls.