Why We Pick Sides Over Nothing: Instant Tribalism Science

TL;DR: Your brain is outsourcing memory to Google, and neuroscientists can't agree on whether this represents cognitive decline or evolution. The Google effect shows we remember where to find information rather than the information itself - a shift that might free cognitive resources for higher-order thinking, or lead to dangerous dependency.

You reach for your phone without thinking. Someone asks a question and before your brain fully engages, your fingers are already typing into Google. The trivia that once cluttered your mind - phone numbers, addresses, historical dates - has quietly migrated to the cloud. You're not losing your memory. You're redistributing it.

Scientists call this the Google effect, though digital amnesia sounds more ominous. First identified in 2011 by Columbia University psychologist Betsy Sparrow and her colleagues, the phenomenon describes our tendency to forget information that's readily available online. We remember where to find answers instead of the answers themselves. Our brains, it turns out, treat search engines like external hard drives.

What's remarkable isn't that this shift is happening - it's how fast it's becoming our default mode. Kaspersky research found that 32% of Europeans consider their devices an extension of their brains, while 79% report being more dependent on their devices for information than five years ago. Among Americans, 92% view smartphones as their memory. We've essentially agreed, as a species, to outsource recall.

Yet here's the twist: neuroscientists can't agree on whether this represents cognitive decline or cognitive evolution.

The foundational research emerged from a simple observation: people were changing what they remembered. In Sparrow's experiments, participants who believed information would be saved on a computer showed significantly poorer recall of that information. But they excelled at remembering where it was saved. The brain was making a calculated trade: sacrifice content, preserve location.

To test how automatically this happens, researchers gave people difficult trivia questions, then measured reaction times on a modified Stroop task with internet-related words. After struggling with hard questions, participants were slower to name colors of internet-related terms (818 milliseconds) compared to unrelated words (638 milliseconds). Translation: the mere act of encountering difficult questions primed people to think of computers. The internet had become so salient as an information source that it activated automatically when knowledge gaps appeared.
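The size of that priming effect is simple arithmetic on the two reaction times quoted above. A minimal Python sketch, using only the figures from the text (the variable names are illustrative and not taken from the study):

```python
# Mean color-naming times quoted in the text, in milliseconds.
internet_words_ms = 818
unrelated_words_ms = 638

# Stroop interference: the extra time needed to name the color of a word
# whose meaning has captured attention.
interference_ms = internet_words_ms - unrelated_words_ms

# The same gap expressed relative to the baseline (unrelated words).
relative_slowdown = interference_ms / unrelated_words_ms

print(f"Interference: {interference_ms} ms")
print(f"Relative slowdown: {relative_slowdown:.1%}")
```

That works out to a 180 ms delay, or roughly 28% slower for internet-related words than for unrelated ones.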

This isn't just behavioral - it's neural. When researchers compared brain activity in people learning facts through internet searches with that of people using traditional encyclopedias, they found decreased activation in the bilateral occipital gyrus, left temporal gyrus, and bilateral middle frontal gyrus in the internet group. These regions are involved in visual processing, memory, and executive function. Put simply, learning via search appears to engage these areas less.

More dramatically, studies of London taxi drivers who memorized the city's street layout showed enlarged hippocampi - the brain's memory center. The worry with GPS is the flip side: when navigation aids remove the need for memorization, that region gets less exercise and may shrink. Use it or lose it seems to apply to the brain as well.

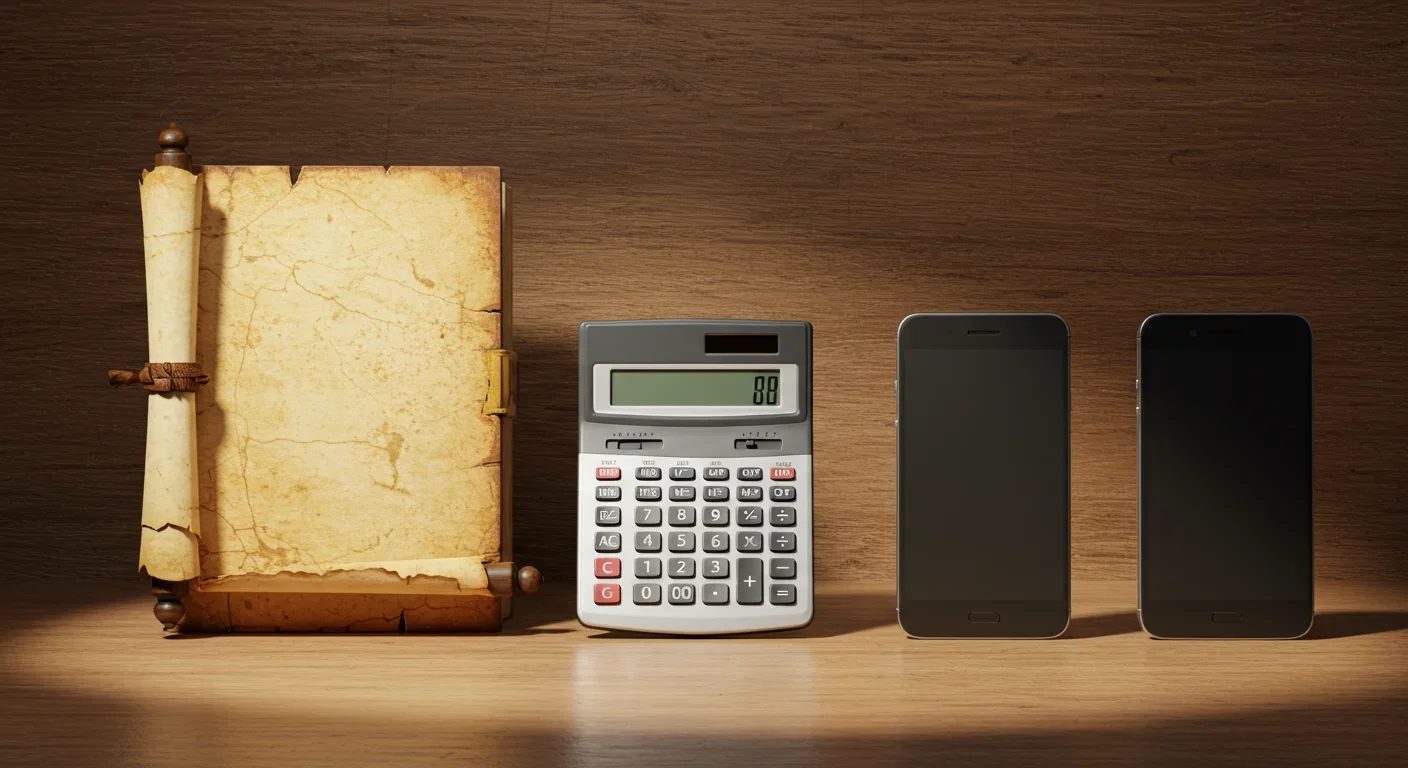

Before you panic about neural atrophy, consider that cognitive offloading isn't new - only the medium has changed. Socrates famously worried that writing would destroy memory. He was right that it changed memory, but wrong about the consequences. Literate societies didn't become stupider; they became more complex because freed cognitive resources enabled philosophy, mathematics, and legal systems that oral cultures couldn't sustain.

The printing press triggered similar anxieties. Critics warned that widespread books would eliminate the need to remember anything. They had a point. Medieval scholars who memorized entire texts were replaced by readers who knew which book contained what information. This shift from rote memorization to information navigation didn't diminish human capability - it scaled it.

Calculators offer a closer analogy. When electronic calculators became ubiquitous in the 1970s, educators predicted mathematical incompetence. Yet research found that students using calculators for computation could tackle more complex problems because they weren't bogged down in arithmetic. The cognitive resources once devoted to multiplication tables got redirected toward conceptual understanding.

Transactive memory theory, developed by psychologist Daniel Wegner in 1985, explains why. In any group - couples, teams, communities - members specialize in different knowledge domains. You don't need to remember everything; you need to remember who knows what. One partner remembers travel details, the other handles finances. Groups become smarter than individuals because they distribute knowledge efficiently.

Search engines are simply the latest - and most powerful - member of our transactive memory system.

Some neuroscientists argue that digital memory represents evolutionary adaptation, not degradation. The brain's primary job isn't to warehouse facts - it's to solve problems efficiently. From this perspective, outsourcing retrieval makes perfect sense. Why dedicate neural real estate to storing information that's universally accessible?

Consider working memory, which holds about 3-5 chunks of information simultaneously. That's the brain's RAM, and it's precious. Every fact you're actively remembering occupies space that could be used for reasoning, creativity, or pattern recognition. If search engines handle storage, working memory can focus on higher-order thinking.

More intriguingly, forgetting might be a feature, not a bug. A 2017 study published in Neuron found that the brain actively prunes memories to make room for relevant information. The researchers called this "memory transience" and argued it's essential for cognitive flexibility. A brain cluttered with outdated facts can't adapt to new environments.

So when you forget a colleague's email address because you know you can search for it, your brain might be operating exactly as designed - prioritizing navigation over storage, flexibility over rigidity.

But not all the data is reassuring. Among university students, 70% showed high smartphone addiction and 84.5% used phones as reminders. Many couldn't recall basic personal information. This goes beyond efficient memory management into something more concerning: dependency.

The problem isn't outsourcing memory - it's how that outsourcing affects deeper cognitive processes. A 2015 review on Internet-age learning concluded that digital devices can promote "mental passivity" that counteracts autonomous, creative, and critical thinking. When information comes too easily, we stop engaging with it deeply.

This shows up in studies of note-taking. Students who take handwritten notes outperform those using laptops, even when both groups have equal access to their notes during exams. The manual process of writing forces synthesis and comprehension. Digital note-taking often devolves into transcription - capturing without processing.

Even more troubling, a recent survey of 319 knowledge workers found that those who leaned most heavily on generative AI tools reported doing less critical thinking. The more they relied on AI for analysis, the less they practiced analytical thinking themselves. The worry is that cognitive muscles atrophy from disuse.

Brain imaging studies add biological weight to these concerns. Some link overreliance on digital devices to weaker hippocampal function and impaired prefrontal cortex activity - regions essential for memory formation and executive decision-making. There's even emerging evidence that persistent digital use might accelerate early-onset cognitive decline, with symptoms resembling mild cognitive impairment.

Here's where things get tricky: the Google effect doesn't just change what we remember - it changes what we think we know. A 2015 study from UC Santa Cruz and University of Illinois found that people who searched the internet rated their own knowledge higher on completely unrelated questions. Simply having access to search engines inflated their confidence about topics they hadn't looked up yet.

This is dangerous in contexts requiring expertise. A history professor interviewed in one study noted that students exhibit "almost complete dependence on Google and Wikipedia." The issue isn't that these resources exist - it's that students often can't distinguish between knowing how to find information and actually understanding it.

Consider GPS navigation. One study found that 80% of participants followed incorrect GPS instructions even after being explicitly warned the directions would be wrong. This is automation bias: outsourcing decisions to digital tools even when those tools are demonstrably unreliable.

The workplace implications are significant. If employees believe they can always look things up, they may not develop the deep domain expertise that enables intuitive problem-solving. Google can tell you facts, but it can't give you the synthesized understanding that comes from years of experience.

Digital natives - those who grew up with smartphones - relate to search fundamentally differently than digital immigrants who remember pre-internet life. For older generations, searching feels like a conscious choice. For younger ones, it's reflexive.

This isn't necessarily worse, just different. Digital natives often exhibit superior skills in information navigation, multitasking, and digital literacy. They're building neural pathways optimized for a world where information is abundant and filtering is the critical skill.

But they may lack the deep reading and sustained focus that characterized earlier education. One longitudinal study found that adolescents using screens more than seven hours daily showed higher rates of anxiety and depression. The constant availability of information may not just change cognition - it may also affect mental health.

So what's the verdict? The research suggests digital amnesia represents both evolution and risk. The key is intentionality.

Practice retrieval before searching. When a question arises, pause. Try to recall the answer before reaching for your phone. This simple act strengthens memory pathways and prevents total dependence. Even if you're wrong, the effort of retrieval improves retention when you eventually find the answer.

Distinguish between facts and understanding. Outsourcing factual recall is fine - nobody needs to memorize state capitals anymore. But understanding concepts, principles, and relationships requires internal processing that can't be offloaded. When you read something important, force yourself to explain it in your own words before moving on.

Embrace strategic forgetting. Not everything deserves to be remembered. Your brain is right to prioritize. Make active decisions about what matters enough to internalize versus what can remain external. This turns memory from a passive warehouse into a curated collection.

Build memory deliberately. Use spaced repetition systems for information you genuinely need to master. These apps leverage cognitive science to help you retain what matters without constant reviewing. This frees your Google-enabled brain to focus on novel problems.

Practice digital detox. Periods without search access force your brain back into active memory mode. Even short breaks - leaving your phone behind on a walk, reading without devices nearby - remind your brain how to operate independently. Some research suggests this reduces stress and improves cognitive function.

Cultivate meta-awareness. Notice when you reach for your phone. Is it necessary, or habitual? Simply being aware of how often you offload cognition can help you make conscious choices about when searching serves you versus when it prevents deeper engagement.
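For the curious, the idea behind the spaced repetition systems mentioned above can be sketched in a few lines of Python. This is a deliberately simplified doubling rule, assumed here for illustration only; it is not the algorithm used by any particular app, and the `Card` class and `review` function are hypothetical names.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Card:
    prompt: str
    interval_days: int = 1  # days to wait before the next review


def review(card: Card, recalled: bool, today: date) -> date:
    """Update the card's interval and return the next review date.

    Assumed rule: double the gap after a successful recall,
    fall back to one day after a failed one.
    """
    if recalled:
        card.interval_days *= 2
    else:
        card.interval_days = 1
    return today + timedelta(days=card.interval_days)


card = Card("What is transactive memory?")
start = date(2024, 1, 1)
first = review(card, recalled=True, today=start)    # interval becomes 2 days
second = review(card, recalled=True, today=first)   # interval becomes 4 days
third = review(card, recalled=False, today=second)  # interval resets to 1 day
print(first, second, third)
```

Each successful recall pushes the next review further out, so well-known material takes up less and less of your time, while anything you miss comes back the next day.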

Everything discussed so far is about to intensify. Search engines provide information when asked. AI assistants are proactive, anticipating needs before you articulate them. They don't just answer questions - they frame questions, summarize complex topics, and even generate creative content.

This could be cognitive offloading on steroids. Or it could be the next transactive memory upgrade, like moving from handwritten notes to searchable databases. The determining factor will be whether we use these tools to augment cognition or replace it.

Early research on knowledge workers using generative AI suggests both outcomes are possible. Those who treated AI as a collaborative tool - questioning outputs, verifying claims, integrating AI analysis with their own expertise - saw productivity gains without skill loss. Those who passively accepted AI outputs experienced capability decline.

Your relationship with search engines probably won't change dramatically after reading this. You'll still Google things dozens of times a day. But perhaps you'll be more intentional about when and how you search.

The brain you're building is adapted to a world of abundant information. That's appropriate - information is abundant. But don't let adaptation become atrophy. The goal isn't to memorize phone numbers or become a human encyclopedia. It's to preserve the cognitive capabilities that matter: critical thinking, deep comprehension, creative synthesis.

Search engines are tools, not replacements. The question isn't whether you'll use Google - of course you will. The question is whether you'll remain capable of thinking deeply even when Google isn't available.

Because here's the thing about cognitive offloading: it only works if you could still do the task yourself when necessary. Offloading becomes dependency when the tool becomes indispensable. Keep your cognitive muscles active, even as you let technology handle the routine work.

Your brain is learning to navigate information rather than store it. That's fine, as long as navigation doesn't replace comprehension. Use search engines to access the world's knowledge. Just make sure you're still building some knowledge of your own.

The goal isn't to reverse the Google effect - it's to manage it. Think of your brain and your devices as a partnership. Let each handle what it does best, but never fully abdicate the work of thinking to the tools in your pocket.

We're in the middle of a cognitive transition that's just as significant as the shift from oral to literate cultures. The people who'll thrive aren't those who resist technology or embrace it uncritically. They're the ones who understand exactly what they're outsourcing - and what they're keeping for themselves.

Ahuna Mons on dwarf planet Ceres is the solar system's only confirmed cryovolcano in the asteroid belt - a mountain made of ice and salt that erupted relatively recently. The discovery reveals that small worlds can retain subsurface oceans and geological activity far longer than expected, expanding the range of potentially habitable environments in our solar system.

Scientists discovered 24-hour protein rhythms in cells without DNA, revealing an ancient timekeeping mechanism that predates gene-based clocks by billions of years and exists across all life.

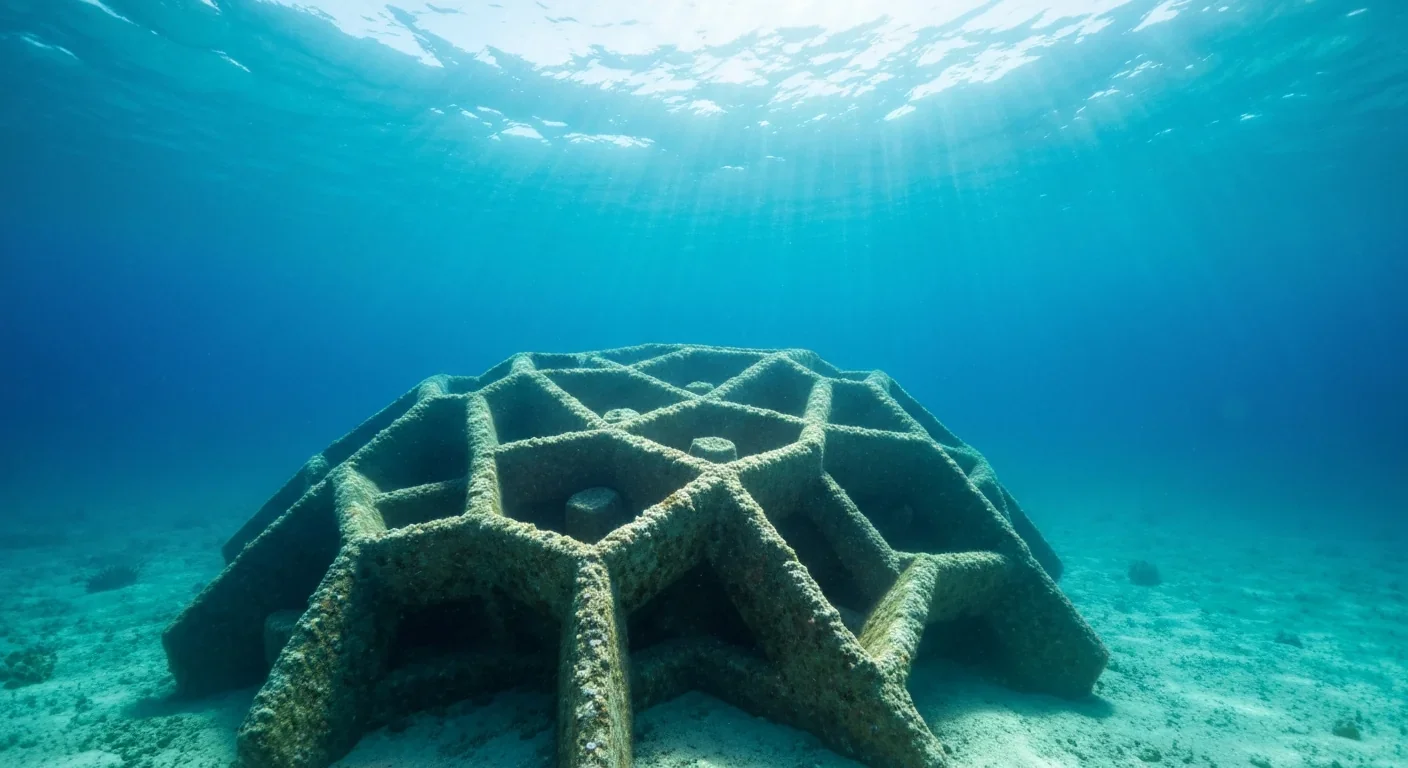

3D-printed coral reefs are being engineered with precise surface textures, material chemistry, and geometric complexity to optimize coral larvae settlement. While early projects show promise - with some designs achieving 80x higher settlement rates - scalability, cost, and the overriding challenge of climate change remain critical obstacles.

The minimal group paradigm shows humans discriminate based on meaningless group labels - like coin flips or shirt colors - revealing that tribalism is hardwired into our brains. Understanding this automatic bias is the first step toward managing it.

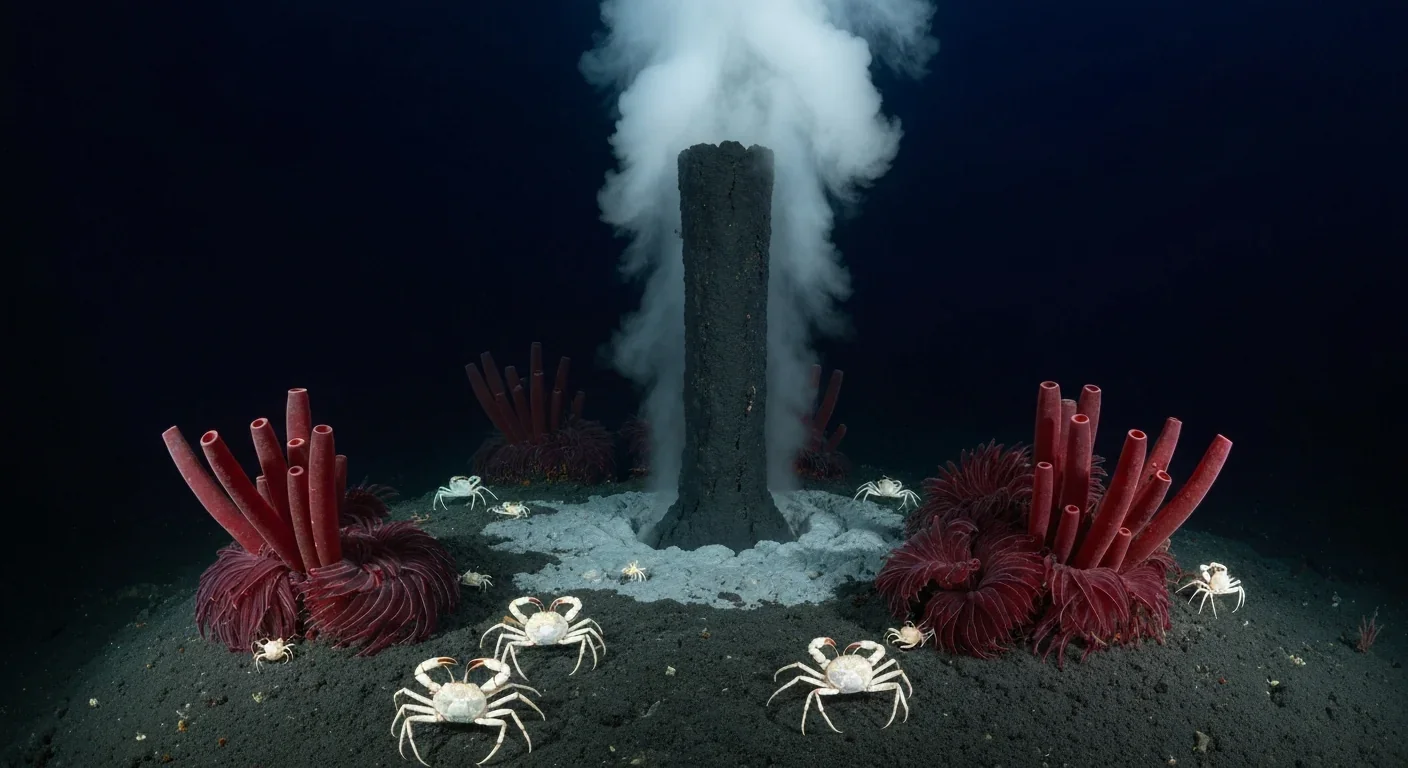

In 1977, scientists discovered thriving ecosystems around underwater volcanic vents powered by chemistry, not sunlight. These alien worlds host bizarre creatures and heat-loving microbes, revolutionizing our understanding of where life can exist on Earth and beyond.

Automated systems in housing - mortgage lending, tenant screening, appraisals, and insurance - systematically discriminate against communities of color by using proxy variables like ZIP codes and credit scores that encode historical racism. While the Fair Housing Act outlawed explicit redlining decades ago, machine learning models trained on biased data reproduce the same patterns at scale. Solutions exist - algorithmic auditing, fairness-aware design, regulatory reform - but require prioritizing equ...

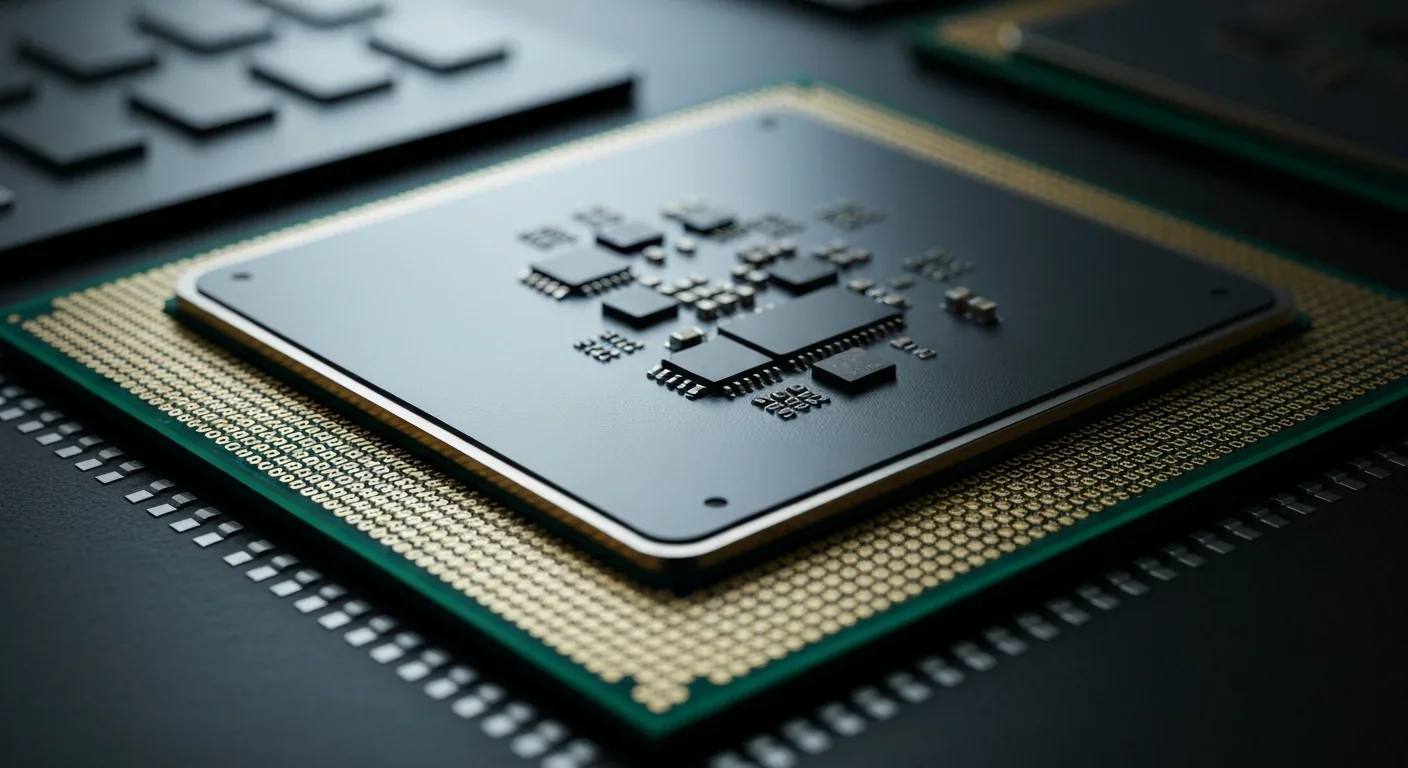

Cache coherence protocols like MESI and MOESI coordinate billions of operations per second to ensure data consistency across multi-core processors. Understanding these invisible hardware mechanisms helps developers write faster parallel code and avoid performance pitfalls.