Why We Pick Sides Over Nothing: Instant Tribalism Science

TL;DR: Language didn't emerge overnight - it evolved over hundreds of thousands of years through anatomical changes, cognitive breakthroughs, and social pressures. From gesture-based communication to symbolic grammar, the journey involved multiple hominin species, genetic innovations like FOXP2, and cultural exchange. Today, AI is decoding animal languages and revealing that communication is a spectrum, while bilingual brains show us language evolution in real time. Understanding how we learned to speak is reshaping everything from education to medicine, proving that the story of language is still being written - and we're all part of it.

When a million-year-old skull was reclassified in 2025, scientists pushed back the emergence of Homo sapiens by half a million years - and with it, the entire timeline of when we might have first spoken. Language, the trait that defines us more than any other, didn't arrive fully formed. It emerged from a tapestry of gestures, grunts, anatomical accidents, and social pressures spanning hundreds of thousands of years. Today, as artificial intelligence learns to decode whale songs and dolphin clicks, we're discovering that the journey from primitive sounds to symbolic grammar holds lessons not just for understanding our past, but for building our future.

In 2025, Professor Xijun Ni's team achieved what seemed impossible: they reconstructed a heavily distorted fossil skull using 3D scanning and genetic analysis, reclassifying Yunxian 2 from Homo erectus to early Homo longi. The finding was so unexpected that Ni himself admitted, "From the very beginning, when we got the result, we thought it was unbelievable."

The implications cascaded outward. If Yunxian 2 walked the Earth a million years ago, then early versions of Neanderthals and our own species probably did too. This pushes the window for language emergence back dramatically, extending the period during which three hominin species coexisted to roughly 800,000 years - far longer than previously believed. "Human evolution is like a tree," Ni explained, "there were three major branches that are closely related, and they may have some interbreeding."

That interbreeding matters profoundly. Eurasians today carry approximately 2% Neanderthal nuclear DNA, proof that our ancestors didn't just share territories - they shared genes, culture, and very likely, communication systems. The Neanderthal FOXP2 gene, often called the "speech gene," is identical to ours. When researchers analyzed the hyoid bone from Kebara Cave in Israel - a tiny horseshoe-shaped anchor for tongue and larynx muscles - they found the Neanderthal version looked almost identical to that of modern humans. The evidence is mounting: our cousins could speak.

Before language, our ancestors lived in a world of immediacy - gestures for "here," grunts for "now," cries for "danger." Then something extraordinary happened: humans developed displacement, the ability to talk about things not physically present or that don't even exist. Honeybees show a limited form of it, using their waggle dance to signal the direction and distance of food that can lie several kilometers from the hive, but humans can debate philosophy, plan for decades ahead, and tell stories about gods we've never seen.

This leap wasn't sudden. It required anatomical changes - a descended larynx, a flexible tongue, a reconfigured vocal tract capable of shaping dozens of distinct sounds. The human mouth is relatively small, can open and close rapidly, and contains a larger, thicker, muscular tongue than our primate cousins possess. These adaptations didn't happen overnight; they were sculpted by evolutionary pressures spanning hundreds of thousands of years.

But anatomy alone doesn't explain language. The real magic happened in our brains. Broca's area and Wernicke's area, both typically located in the left hemisphere, became specialized for language production and comprehension respectively. Yet recent research reveals that both hemispheres are necessary for full language understanding - the left dominates syntax and semantics, while the right excels at context, metaphor, irony, and visualization. Language isn't stored in one place; it's a distributed symphony across multiple brain regions.

One compelling hypothesis suggests that language began not with sounds, but with gestures. Early hominins may have communicated through manual signs, much as modern great apes communicate with gestures, calls, and facial expressions - but crucially, without symbolic syntax. The transition from gesture to vocalization likely accelerated as tool use occupied the hands. If your hands are busy shaping stone into blades, you need another channel for communication: your voice.

This shift wasn't just practical - it was cognitive. Eric Gans, founder of generative anthropology, proposed a radical idea: language originated in a singular symbolic event, what he calls "an aborted gesture of appropriation." Imagine early humans reaching for a desired object, then pulling back in recognition of collective danger or sacredness. That halted gesture became the first symbol, a representation standing in for the thing itself. From this originary moment, Gans argues, all culture and ritual emerged - language as a memorial to our first shared symbol.

Whether or not you accept Gans's event-based origin, the central insight holds: symbolic representation marked the boundary between animal communication and human language. Prehistoric cave paintings in Indonesia, dated to at least 51,200 years ago, and burial rituals at Qafzeh involving pierced shells and pigments from around 90,000 years ago, provide tangible evidence that symbolic cognition predates - or at minimum, evolved alongside - complex spoken language.

In April 2025, Stanford researchers published a study in Cell that unveiled a stunning molecular detail about FOXP2, the gene most closely linked to human speech. FOXP2 contains one of the longest known polyglutamine (polyQ) stretches in the human body - more than 40 consecutive repeats - yet it doesn't clump or cause disease. In contrast, pathogenic proteins like those in Huntington's disease form toxic aggregates with as few as 36 repeats.
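To make the idea of a "polyQ stretch" concrete, here is a minimal Python sketch that measures the longest run of consecutive glutamines (written Q in one-letter amino acid code) in a protein sequence. The function name `longest_polyq_run` and both sequences are invented for illustration; they are toy strings, not the real FOXP2 or huntingtin sequences.

```python
import re


def longest_polyq_run(protein: str) -> int:
    """Return the length of the longest run of consecutive glutamines (Q)."""
    runs = re.findall(r"Q+", protein.upper())
    return max((len(run) for run in runs), default=0)


# Toy sequences, made up for this example (NOT real protein data).
toy_long_tract = "MSTE" + "Q" * 40 + "PLAQQA"
toy_short_tract = "MSTEQQQAPLQQ"

print(longest_polyq_run(toy_long_tract))   # 40
print(longest_polyq_run(toy_short_tract))  # 3
```

A count like this only describes the length of the tract. As the FOXP2 findings suggest, length alone does not determine whether a protein clumps.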

How does FOXP2 avoid disaster? The team, led by Joanna Wysocka and Shady Saad, discovered two protective mechanisms: DNA binding and phosphorylation during cell division. When FOXP2 binds to DNA to regulate genes, it stays soluble. During mitosis, it gains a negatively charged phosphate coat that prevents it from sticking together. When either function is disrupted - as happens in verbal dyspraxia - the protein begins to clump.

The evolutionary implications are profound. Comparative analysis showed that human FOXP2 is significantly more soluble than versions from chimpanzees or mice. These tweaks may have allowed our brains to ramp up FOXP2 expression without cytotoxicity, enabling the fine motor control over lips, tongue, and jaw necessary for articulate speech. Mice engineered with the human FOXP2 gene make more complex vocalizations and form brain connections more easily - a living experiment in linguistic evolution.

But the story doesn't end there. Researchers fused FOXP2's protective features to the toxic Huntington's protein in laboratory cells and watched as the deadly clumps dissolved. "The study was a completely new approach to target these diseases by addressing the root cause," Saad explained. The very adaptations that gave us speech might now help us combat neurodegenerative diseases.

For decades, linguist Charles Hockett's "design features" served as the gold standard for distinguishing human language from animal communication. He identified sixteen traits, four of which - displacement, productivity, cultural transmission, and duality of patterning - he believed were uniquely human. Yet recent research is blurring those boundaries.

Bird species like the Southern Pied Babbler and Japanese Tit exhibit duality of patterning, using meaningless sound units (like our phonemes) to build meaningful signals (like our words). Bonobos and chimpanzees demonstrate compositionality, combining calls in versatile ways to expand meaning - a 2025 study published in Science Advances documented this in striking detail. Whale song, analyzed by an international team in 2025, contains recurring segments arranged in patterns that make them easier to learn, exhibiting language-like statistical structures remarkably similar to ours.
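One common way to look for recurring segments in a stream of sounds is to measure how predictable each unit is from the one before it, then place a boundary wherever predictability dips. The Python sketch below illustrates that general idea on a toy sequence of letters. It is an assumption-laden illustration, not a description of the method any of the studies above used. The function names, the 0.75 threshold, and the data are all hypothetical.

```python
from collections import Counter


def transitional_probs(seq):
    """Estimate P(next unit | current unit) from adjacent pairs in seq."""
    pair_counts = Counter(zip(seq, seq[1:]))
    first_counts = Counter(seq[:-1])
    return {(a, b): n / first_counts[a] for (a, b), n in pair_counts.items()}


def segment(seq, threshold=0.75):
    """Split a non-empty seq wherever the transition probability is low."""
    probs = transitional_probs(seq)
    chunks, current = [], [seq[0]]
    for a, b in zip(seq, seq[1:]):
        if probs[(a, b)] < threshold:  # unpredictable step: likely a boundary
            chunks.append(current)
            current = []
        current.append(b)
    chunks.append(current)
    return chunks


# Toy "song": three invented units (ABC, DE, FG) strung together.
units = ["ABC", "DE", "ABC", "FG", "DE", "FG", "ABC", "DE"]
stream = list("".join(units))

print(["".join(chunk) for chunk in segment(stream)])
# ['ABC', 'DE', 'ABC', 'FG', 'DE', 'FG', 'ABC', 'DE']
```

Inside a unit, each sound always predicts the next, so the probability is 1.0. Between units the next sound varies, so the probability drops below the threshold and the sketch recovers the original units.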

Even more intriguing: cowbirds preferentially use shared song types when singing to male rivals. In a study tracking 12 males across 5,000+ songs, 11 of the birds showed a significant correlation between how often they used a song and how many other males shared that song in their repertoires. This isn't random - it's selection favoring stable, shared vocalizations, a potential evolutionary pathway from cultural convergence to linguistic convention.

Hockett's features form a spectrum, not a wall. Animals approximate human language through individual features, but the full repertoire - displacement plus productivity plus duality plus cultural transmission - remains distinctly ours. The question shifts from "Do animals have language?" to "How do elements of language-like communication distribute across species?"

Artificial intelligence is now doing what seemed like science fiction a decade ago: decoding animal languages. Google's DolphinGemma, developed with the Wild Dolphin Project and Georgia Institute of Technology, interprets dolphin clicks and whistles to produce realistic responses. Models trained on massive acoustic datasets are uncovering hierarchical, compositional patterns in dolphin and whale vocalizations that mirror early human syntax.

Could AI discern syntactic structures in whale song comparable to human grammar? Early evidence suggests it might. Researchers are discovering that humpback calls contain not just repetition, but hierarchy - smaller units nested within larger phrases, recursively structured like human sentences. If validated, this would revolutionize our understanding of animal cognition and provide a living laboratory for testing universal grammatical principles.

The parallels to human language acquisition are striking. Just as infants learn language through social feedback, marmosets - one of the few primate species besides humans to exhibit rapid brain growth around birth - acquire adult-like calls more quickly when caregivers respond frequently. Both species experience significant neurodevelopment in highly interactive social environments. The synergy between biology and social context appears essential for vocal learning, whether in human babies or chattering marmosets.

If you want to see language evolution in action, look at a bilingual brain. Proficient bilinguals can switch languages within 200 to 300 milliseconds - about as fast as the blink of an eye. This speed is attributed in part to myelination, the insulation of frequently used neural pathways that speeds up signal transmission.

But bilingualism doesn't just make you faster; it changes your brain's structure. Research shows that early bilinguals have increased gray matter in Broca's and Wernicke's areas and a thicker anterior cingulate cortex, a region involved in executive control. "Being bilingual for a long time can actually change the shape of your brain!" researchers reported after observing measurable differences in brain scans.

The functional benefits extend beyond structure. Bilinguals perform better on tasks requiring them to ignore distractions, make more rational decisions when speaking in their non-native language (because emotional bias is reduced), and report feeling like slightly different people depending on which language they speak - a testament to how deeply language intertwines with identity and cognition.

Bilingual infants show stronger brain responses to speech sounds than monolingual peers, even before they can speak. A 2016 study by McElroy found that babies bilingual in Spanish and English had enhanced neural activation compared to English-only infants, suggesting that early exposure to multiple linguistic systems tunes the brain for flexibility and processing power.

This plasticity offers a living model for how new linguistic systems integrate into existing neural architectures - a glimpse, perhaps, into how our ancestors' brains adapted as language complexity increased.

How did the intricate rules of grammar emerge? Two camps offer competing visions. Noam Chomsky's Universal Grammar (UG) posits an innate language acquisition device hardwired into human genetics - a biological mutation that occurred, perhaps, in one or two generations of early Homo sapiens, endowing us with the capacity for infinite creative expression.

The opposing view, championed by usage-based theorists like Michael Tomasello and Joan Bybee, argues that grammatical structure is emergent - built from frequency, interaction, and culture rather than genetic endowment. Children don't arrive with grammar pre-installed; they construct it through usage patterns, social feedback, and pragmatic learning. "Usage-based approaches provide a more accurate account of language acquisition than Universal Grammar," a 2019 review concluded, citing empirical studies showing that frequency effects and interactional learning shape linguistic competence.

The debate isn't settled, but the evidence increasingly favors a middle path: some universal constraints (perhaps related to cognitive architecture or sensorimotor integration) combined with massive cultural variation and usage-driven learning. Language is both biological and cultural, both innate and learned - a hybrid system reflecting our dual nature as evolved animals and cultural beings.

Recent neuroscience reveals that understanding language isn't just an abstract process - it's deeply embodied. When you read the word "kick," your motor cortex activates as if preparing to kick. When you hear "grasp," regions controlling hand movement light up. This isn't metaphorical; it's measurable.

Magnetoencephalography (MEG) studies show that language-induced sensorimotor reactivations occur both early (80 - 200 ms after stimulus) and late (300+ ms), indicating that embodied systems function as both primary and post-conceptual mechanisms. In other words, your brain simulates the physical experience of words as part of understanding them - a process likely rooted in the mirror neuron system, which couples action observation with execution.

This has profound implications for language evolution. If language comprehension relies on multimodal and embodied brain networks, then early language may have co-evolved with sensorimotor integration systems. Gesture and action weren't just stepping stones to speech - they remain foundational to how we process meaning.

The "simulation theory" of language grounding suggests that when our ancestors first learned to map vocalizations onto gestures and actions, they were building the neural infrastructure that would eventually support abstract symbolic thought. Language didn't replace gesture; it layered onto it, creating a distributed, flexible system capable of representing everything from concrete actions to metaphysical concepts.

We can't hear ancient voices, but we can read their echoes in artifacts. The earliest non-runic attestation of Proto-Germanic may be the Negau helmet inscription, dated to the 2nd century BCE, argued by some to represent the earliest evidence of Grimm's law - a systematic sound shift marking the divergence of Germanic from other Indo-European languages. Early runic inscriptions like those from Vimose in Denmark (2nd century CE) and references in Tacitus's Germania (circa 98 CE) provide tangible records bridging oral tradition and written language.

These fragments are invaluable because direct evidence of ancient speech is impossible to obtain. Proto-Germanic, for instance, is entirely reconstructed using the comparative method - analyzing more archaic Indo-European languages, early Germanic loanwords in Baltic and Finnish, and the few inscriptions that survive. Every reconstruction is a hypothesis, a best guess informed by patterns we observe across related languages.

Further back, the trail grows fainter. Symbolic art - like the 51,200-year-old paintings in Indonesia and 90,000-year-old burial rituals at Qafzeh - suggests symbolic cognition, but not necessarily grammar. Did these early humans possess full syntactic language, or were they communicating through simpler symbolic systems? We don't know. What we do know is that symbolic representation, the foundation of language, was firmly in place tens of thousands of years before writing.

The insights from language evolution aren't just academic - they're reshaping how we build artificial intelligence and teach children. Natural language processing models like GPT and BERT are trained on massive datasets, learning statistical patterns much like usage-based theories propose children do. Yet they lack embodiment, social context, and the sensorimotor grounding that human brains use to anchor meaning. This is why AI can generate fluent text but struggles with common-sense reasoning and physical understanding.
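A toy example shows what "learning statistical patterns" can mean at its simplest. The Python sketch below counts which word follows which in a tiny invented corpus, then predicts the most frequent follower. Models like GPT and BERT are neural networks and work very differently in detail; this bigram counter is only an analogy for frequency-driven learning. The function names and sentences are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(sentences):
    """Count, for each word, how often every other word follows it."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def most_likely_next(counts, word):
    """Return the most frequent follower of word, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Invented mini-corpus, standing in for the speech a learner hears.
corpus = [
    "the dog chased the ball",
    "the dog ate",
    "the cat chased the dog",
]
model = train_bigrams(corpus)

print(most_likely_next(model, "the"))     # dog
print(most_likely_next(model, "chased"))  # the
print(most_likely_next(model, "whale"))   # None
```

The counter knows nothing about dogs or balls. It has only frequencies, which is one way to see why statistical fluency alone does not supply the grounding that bodies and social context provide.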

Researchers are now exploring embodied AI - systems that learn language through interaction with simulated or real environments, much like infants do. By grounding words in actions and sensory experiences, these systems aim to replicate the multimodal networks that make human language robust and flexible.

In education, understanding the role of caregiver interaction and social feedback is transforming early childhood language programs. Studies like those by Hurtado and Weisleder show that the quantity of caregiver talk correlates directly with processing speed and vocabulary growth in toddlers. The implication is clear: early, rich linguistic engagement tunes the brain for lifelong learning.

Bilingual education is being reimagined not as a linguistic luxury but as a cognitive advantage. The structural brain changes, enhanced executive function, and improved attention that bilingualism confers are now recognized as valuable developmental outcomes, prompting schools worldwide to adopt immersive dual-language programs.

Perhaps the most unexpected application of language evolution research is in medicine. The discovery that FOXP2's anti-clumping mechanisms - DNA binding and phosphorylation - protect it from aggregation has opened a new frontier in treating neurodegenerative diseases. By attaching DNA-binding tags or phosphate mimics to the toxic Huntington's protein, researchers reduced or dissolved amyloid aggregates in laboratory cells.

This approach could extend beyond Huntington's to other polyQ-expansion disorders and protein-aggregation diseases like Alzheimer's and Parkinson's. The dual strategies that FOXP2 evolved to enable speech - keeping proteins soluble while allowing high expression - may become therapeutic tools for conditions that have plagued humanity for millennia.

The irony is poetic: the gene that gave us the power to name our suffering may also help us cure it.

As we stand in 2025, language evolution has entered a new chapter. We're not just studying how language emerged; we're creating new forms of it. AI systems are learning to translate between human languages, decode animal communication, and even generate novel linguistic structures. Within the next decade, you'll likely interact daily with AI that understands context, nuance, and emotion at near-human levels.

But this technological leap raises profound questions. If AI can master language without a body, without social interaction, without the evolutionary pressures that shaped human speech, what does that say about the nature of language itself? Is language fundamentally symbolic processing, or is it inseparable from embodied experience?

The answer may redefine what it means to communicate. As we decode whale songs and dolphin dialects, we may discover that intelligence and communication are far more diverse than we imagined - that the boundary we drew around human language was not a wall but a gradient, and that we share this gradient with other minds on Earth and, perhaps someday, beyond.

The journey from grunts to grammar wasn't linear or simple. It involved anatomical accidents (a descended larynx), cognitive breakthroughs (symbolic representation), social pressures (the need for cooperation and culture), and genetic innovations (FOXP2's solubility). It unfolded over hundreds of thousands of years, across multiple hominin species, through interbreeding, cultural exchange, and endless experimentation.

What makes this story so powerful is that it's still being written. Every bilingual child rewiring their brain, every AI model learning syntax from data, every researcher decoding animal communication - they're all participants in the ongoing evolution of language. We didn't conquer the world alone, and we didn't invent language in isolation. It emerged from our interactions with each other, with our environment, and with the deep evolutionary forces that shape all life.

As we look ahead, the challenge isn't just to understand where language came from, but to steward where it's going. In an age where AI can generate text, translate speech, and even simulate conversation, the question isn't whether machines can use language - it's whether we can preserve the embodied, social, deeply human qualities that make language more than just information transfer. Language is connection. It's culture. It's memory and imagination intertwined. And that, no matter how sophisticated our technology becomes, is worth protecting.

The million-year-old skull that rewrote our timeline reminds us that our story is longer, stranger, and more interconnected than we ever suspected. From the first symbolic gesture to the latest AI breakthrough, language remains our most powerful tool - not because it lets us speak, but because it lets us understand each other, and in doing so, understand ourselves.

Ahuna Mons on dwarf planet Ceres is the solar system's only confirmed cryovolcano in the asteroid belt - a mountain made of ice and salt that erupted relatively recently. The discovery reveals that small worlds can retain subsurface oceans and geological activity far longer than expected, expanding the range of potentially habitable environments in our solar system.

Scientists discovered 24-hour protein rhythms in cells without DNA, revealing an ancient timekeeping mechanism that predates gene-based clocks by billions of years and exists across all life.

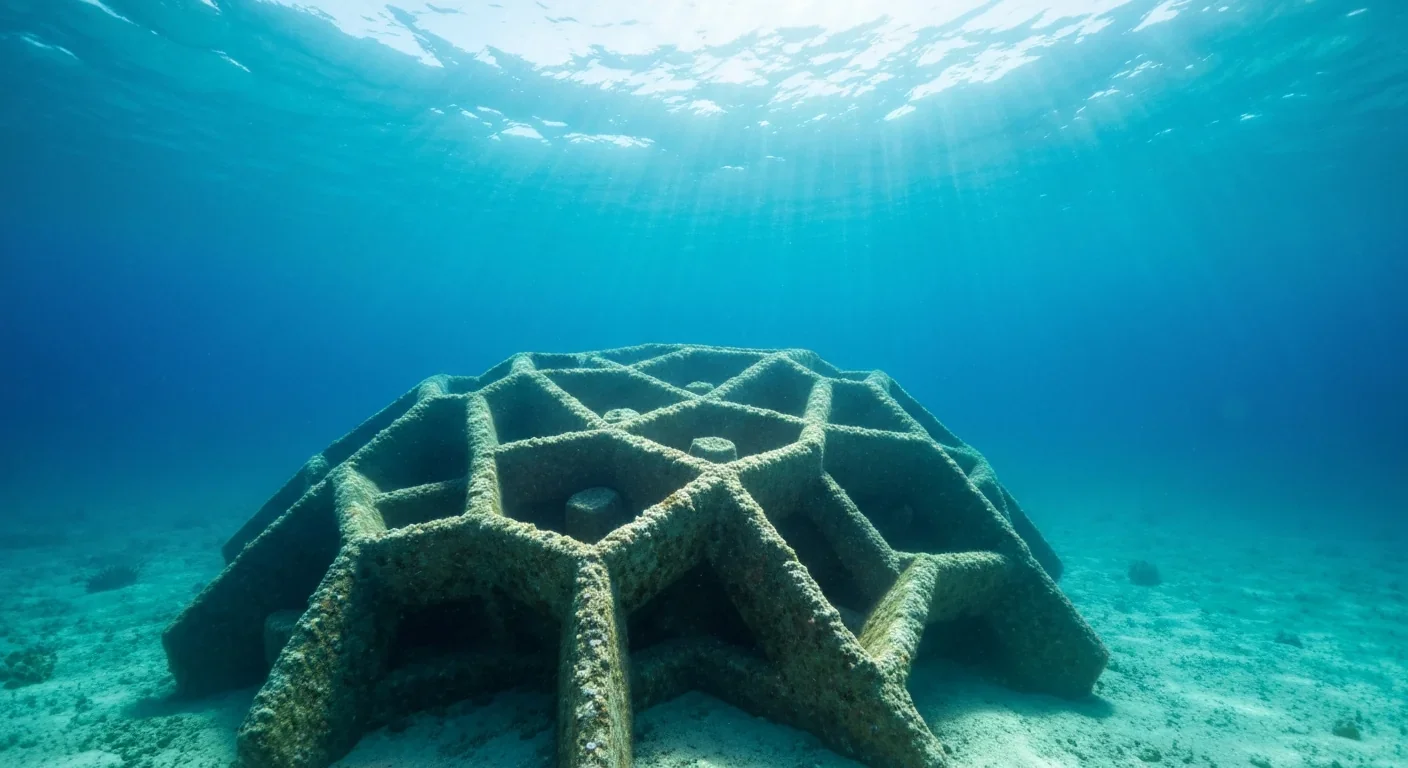

3D-printed coral reefs are being engineered with precise surface textures, material chemistry, and geometric complexity to optimize coral larvae settlement. While early projects show promise - with some designs achieving 80x higher settlement rates - scalability, cost, and the overriding challenge of climate change remain critical obstacles.

The minimal group paradigm shows humans discriminate based on meaningless group labels - like coin flips or shirt colors - revealing that tribalism is hardwired into our brains. Understanding this automatic bias is the first step toward managing it.

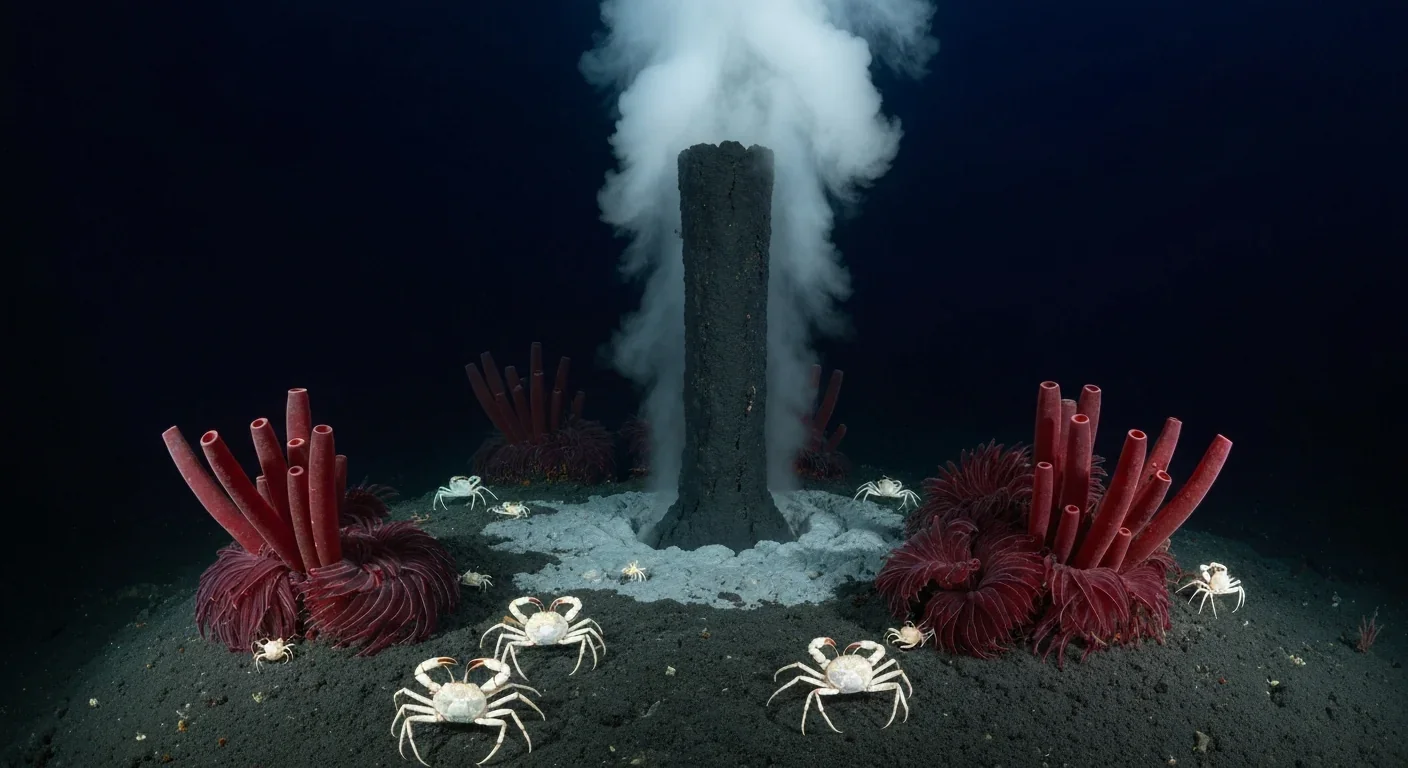

In 1977, scientists discovered thriving ecosystems around underwater volcanic vents powered by chemistry, not sunlight. These alien worlds host bizarre creatures and heat-loving microbes, revolutionizing our understanding of where life can exist on Earth and beyond.

Automated systems in housing - mortgage lending, tenant screening, appraisals, and insurance - systematically discriminate against communities of color by using proxy variables like ZIP codes and credit scores that encode historical racism. While the Fair Housing Act outlawed explicit redlining decades ago, machine learning models trained on biased data reproduce the same patterns at scale. Solutions exist - algorithmic auditing, fairness-aware design, regulatory reform - but require prioritizing equ...

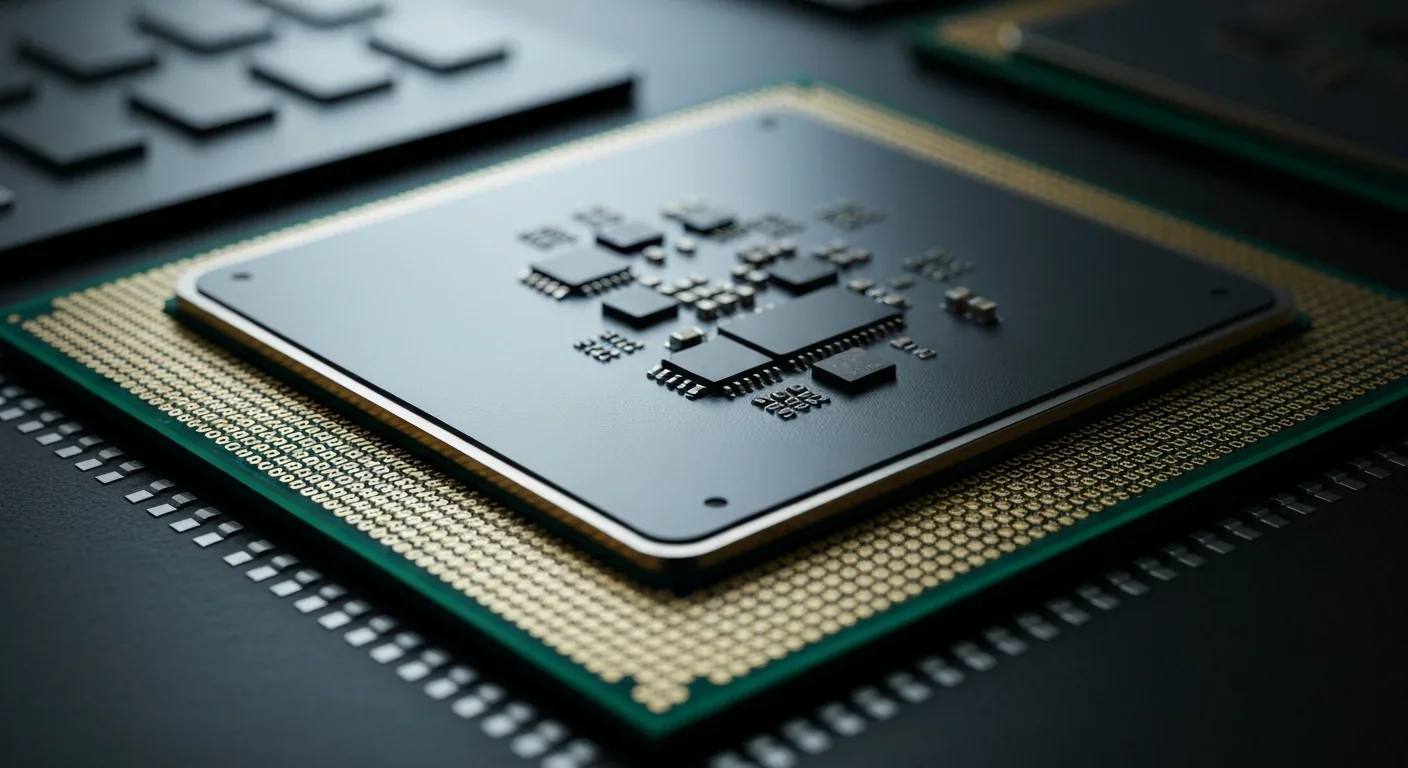

Cache coherence protocols like MESI and MOESI coordinate billions of operations per second to ensure data consistency across multi-core processors. Understanding these invisible hardware mechanisms helps developers write faster parallel code and avoid performance pitfalls.