Why We Pick Sides Over Nothing: Instant Tribalism Science

TL;DR: Research suggests that most people, blind or sighted, can learn basic echolocation in about 10 weeks of training, as their brains adapt to process spatial information through sound. This teachable skill, once thought exclusive to gifted blind individuals, reveals profound insights about human neuroplasticity and sensory potential.

Picture this: you're standing in a darkened room with your eyes closed, making clicking sounds with your tongue. Within moments, the space around you reveals itself through echoes bouncing back, painting a sonic picture of walls, doorways, furniture. This isn't science fiction or animal mimicry. It's human echolocation, and researchers now know that virtually anyone can learn it in just ten weeks.

The ability to "see" with sound has moved from the realm of extraordinary individual talent to teachable skill, complete with brain-imaging studies that reveal how our neural wiring transforms when we train this hidden sense. What started as observation of remarkable blind individuals has become a window into one of neuroscience's most profound discoveries: our brains are far more adaptable than we ever imagined.

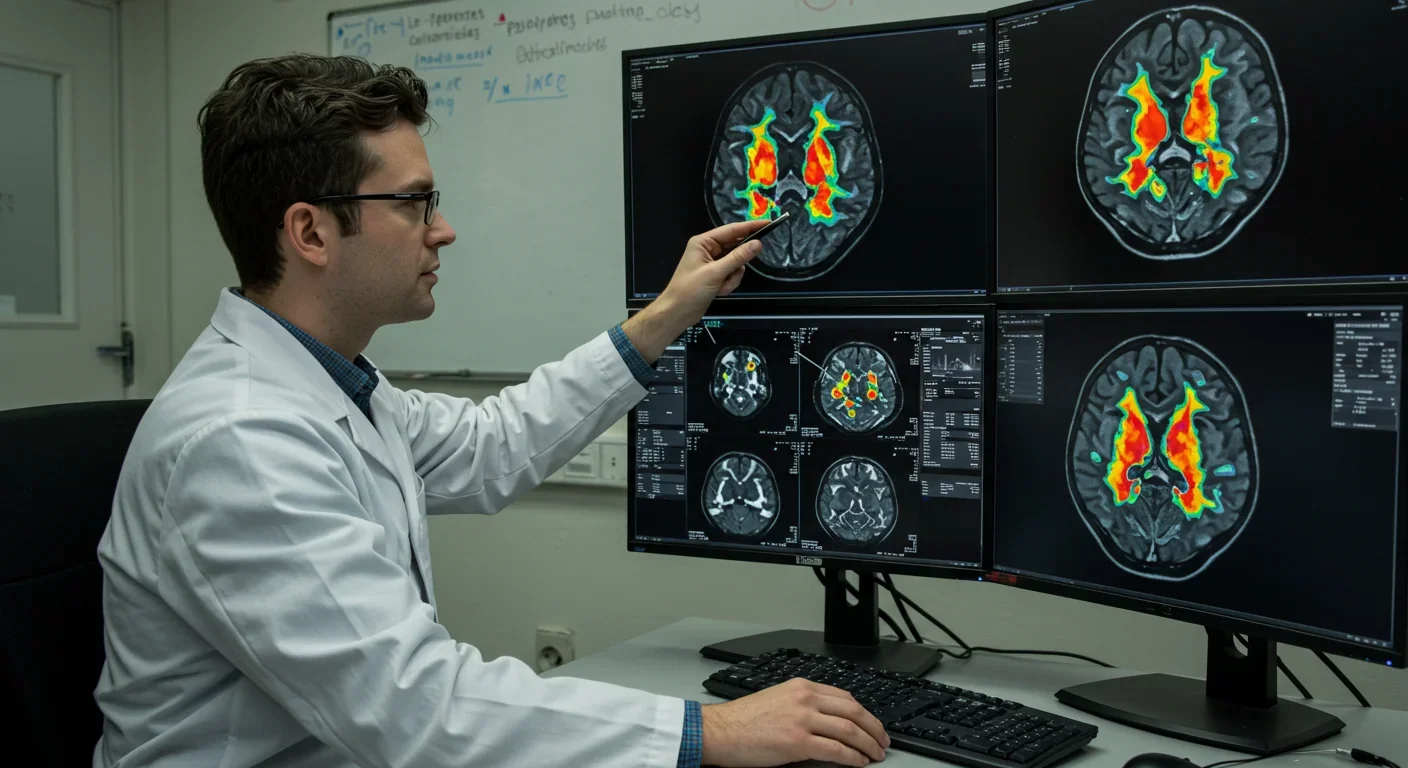

When neuroscientist Lore Thaler at Durham University set out to teach echolocation to both blind and sighted adults, she expected the blind participants to show brain changes. The surprise came when sighted volunteers displayed the same neuroplastic transformation. After just 10 weeks of training, in two sessions a week of two to three hours each (roughly 40 to 60 hours in total), both groups showed their visual cortex responding to sound.

Think about that for a second. The part of your brain dedicated to processing images from your eyes can learn to process spatial information from echoes instead. It's as if your brain's operating system realizes it has hardware sitting idle and decides to repurpose it for a different input stream.

The training itself involved three core tasks: judging the size of objects using echoes, judging their orientation, and navigating virtual mazes with simulated click-plus-echo sounds tied to the participant's position. Participants generated mouth clicks and listened carefully to how those sounds bounced back. By week ten, most could identify objects and navigate spaces they'd never seen.

Follow-up surveys revealed something even more compelling: 83% of blind participants reported improvements in independence and wellbeing three months after completing training. That's not just a cognitive party trick. That's a measurable enhancement to quality of life.

Human echolocation isn't new. Researchers have documented it since the 1950s, but early studies treated it as an isolated phenomenon, something only a handful of extraordinarily gifted blind people could do. Daniel Kish, who lost both eyes to cancer before his first birthday, became one of the most prominent practitioners and teachers of what he calls "FlashSonar."

Kish's approach combines tongue clicks with traditional white cane techniques, enabling him and his students to mountain bike, hike wilderness trails, and navigate complex urban environments. His descriptions of the experience are striking: "The sense of imagery is very rich for an experienced user. One can get a sense of beauty or starkness or whatever from sound as well as echo."

What shifted the field was neuroimaging technology. A 2011 fMRI study by Thaler and colleagues used tiny microphones placed in blind echolocators' ears to record their clicks and the faint echoes that followed. When researchers played those recordings back while scanning the participants' brains, they discovered something remarkable: the primary visual cortex activated during echolocation. The activity wasn't confined to adjacent processing areas. It appeared in V1 itself, the first cortical region to receive visual input from the eyes, relayed through the thalamus.

The finding challenged conventional wisdom about how rigidly our sensory systems are organized. For decades, the textbook view held that primary sensory regions were wholly sense-specific. Vision happened in visual cortex, hearing in auditory cortex, and never the twain shall meet. Echolocation research is among the clearest evidence against that model.

So how exactly does it work? When you produce a sharp sound like a tongue click, that acoustic pulse travels outward until it hits an object. Some energy is absorbed by the material, but some bounces back as an echo. The time delay between click and echo tells you distance: the sound has to travel out and back, so a longer delay means a farther surface. The quality and timbre of the returning sound reveal texture, density, and material properties. Two ears receive slightly different echo patterns, providing stereo information about location and size.
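To make the delay-to-distance relationship concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not anything taken from the studies above: the speed of sound (343 m/s), the ear spacing (0.20 m), and all function names are assumed for the example, and the two-ear calculation uses a deliberately simple path-difference model that ignores the shape of the head.

```python
import math

SPEED_OF_SOUND_M_PER_S = 343.0  # assumed: dry air at roughly 20 degrees C
EAR_SPACING_M = 0.20            # assumed: rough distance between a listener's ears


def echo_distance_m(delay_s: float) -> float:
    """Distance to a reflecting surface, given the click-to-echo delay.

    The sound travels out and back, so the one-way distance is half
    of the total path length.
    """
    if delay_s < 0:
        raise ValueError("delay_s must be non-negative")
    return SPEED_OF_SOUND_M_PER_S * delay_s / 2.0


def echo_delay_s(distance_m: float) -> float:
    """Round-trip delay for an echo from a surface distance_m away."""
    return 2.0 * distance_m / SPEED_OF_SOUND_M_PER_S


def interaural_delay_s(angle_deg: float) -> float:
    """Approximate arrival-time difference between the two ears.

    angle_deg is measured from straight ahead. This is a simple
    path-difference model for a distant source, not a head model.
    """
    return EAR_SPACING_M * math.sin(math.radians(angle_deg)) / SPEED_OF_SOUND_M_PER_S


if __name__ == "__main__":
    for distance in (0.5, 1.0, 3.0):
        delay_ms = echo_delay_s(distance) * 1000
        print(f"wall at {distance} m: echo after {delay_ms:.2f} ms")
    itd_us = interaural_delay_s(30) * 1e6
    print(f"object 30 degrees off-center: {itd_us:.0f} microseconds between ears")
```

Under these assumptions, a wall one meter away returns its echo in just under 6 milliseconds, which gives a sense of how fine the timing cues are.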

Tongue clicks generate brief pulses rich in high frequencies, which makes them well suited to producing clear echoes, especially in cluttered indoor environments. The click is sharp enough to create distinct echoes but doesn't last so long that it muddles the returning signal. Bats and dolphins evolved short pulses for the same reason, though theirs extend into the ultrasonic range, and it turns out human mouths can produce a workable version just fine.

Your brain does the heavy computational lifting. It filters out the outgoing click and focuses on incoming echoes, measuring time delays down to milliseconds. Experienced echolocators can detect objects as small as a coin at several feet, distinguish between materials like wood, metal, or fabric, and create detailed mental maps of room layouts.
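As a rough signal-processing analogy for "ignore the outgoing click, then time the echo," the sketch below builds a synthetic recording and finds the echo by sliding a copy of the click along it (cross-correlation). This is not a model of what the brain does, and every name and number in it is hypothetical: the click shape, the sample rate, the echo gain, and the assumption that the echo arrives only after the click has ended.

```python
import math

SAMPLE_RATE_HZ = 44_100          # assumed recording rate
SPEED_OF_SOUND_M_PER_S = 343.0   # assumed: air at roughly 20 degrees C


def make_click(n_samples=64, freq_hz=3000.0):
    """Short decaying sinusoid standing in for a tongue click (made-up shape)."""
    return [
        math.exp(-5.0 * i / n_samples)
        * math.sin(2.0 * math.pi * freq_hz * i / SAMPLE_RATE_HZ)
        for i in range(n_samples)
    ]


def simulate_recording(click, delay_samples, echo_gain=0.2, total=2048):
    """Outgoing click at time zero plus one quieter copy delay_samples later."""
    if delay_samples + len(click) > total:
        raise ValueError("echo does not fit in the recording")
    recording = [0.0] * total
    for i, value in enumerate(click):
        recording[i] += value
        recording[i + delay_samples] += echo_gain * value
    return recording


def estimate_echo_delay(recording, click):
    """Lag (in samples) where the recording best matches the click template.

    Lags shorter than the click length are skipped, which crudely
    "filters out" the outgoing click itself.
    """
    n = len(click)
    best_lag, best_score = n, float("-inf")
    for lag in range(n, len(recording) - n + 1):
        score = sum(recording[lag + i] * click[i] for i in range(n))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


if __name__ == "__main__":
    click = make_click()
    recording = simulate_recording(click, delay_samples=300)
    lag = estimate_echo_delay(recording, click)
    delay_s = lag / SAMPLE_RATE_HZ
    distance_m = SPEED_OF_SOUND_M_PER_S * delay_s / 2.0
    print(f"echo at lag {lag} samples, {delay_s * 1000:.2f} ms, about {distance_m:.2f} m")
```

Real rooms return many overlapping echoes plus noise, so a single clean peak like this one is the easy case. The point is only that a millisecond-scale delay is recoverable from the sound itself.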

Interestingly, sighted people often experience echo suppression (related to what acousticians call the precedence effect), a perceptual phenomenon where the brain automatically filters out acoustic reflections to prevent confusion. We walk around in echo-rich environments all the time and typically don't notice. Training in echolocation essentially teaches your brain to relax that suppression filter and pay attention to information that was always there, just ignored.

The good news: you don't need to be blind to learn echolocation. The better news: you don't need years of practice. Structured training programs have demonstrated that measurable skill develops in just weeks.

Start simple. Find a quiet room and practice producing consistent tongue clicks. The sound should be sharp and crisp. Stand facing a wall at varying distances and click, paying attention to the subtle echo. Most people can't hear it at first because their brain hasn't learned what to listen for. Patience matters here.

Once you can reliably detect a wall, experiment with different surfaces. A concrete wall returns a different echo quality than drywall or wood paneling. Move closer and farther, noting how the echo changes. This builds your acoustic reference library.

Next, introduce object detection. Place a large flat object like a clipboard or book in front of you at arm's length. Click and notice the echo. Move it closer, farther, to the left, to the right. Your brain starts associating echo characteristics with spatial positions. Gradually reduce object size and increase complexity.

Organizations like World Access for the Blind offer formal training programs that combine these progressive exercises with mobility training. The curriculum typically includes indoor navigation, outdoor orientation, and eventually activities like hiking or cycling. The key is consistent practice with increasing difficulty, just like learning any perceptual skill.

Technology is making training more accessible. Some programs use virtual maze navigation with simulated click-echo sounds, allowing remote learners to develop skills before applying them in physical spaces. Audio feedback systems can highlight subtle echo features that beginners might miss, accelerating the learning curve.
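To give a feel for how a virtual maze could tie echoes to a learner's position, here is a toy sketch. It does not reproduce how any actual training program generates its sounds. The grid layout, the one-meter cell size, and the function names are all hypothetical, and it computes only the delay of the first echo from the nearest wall in each direction.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # assumed: air at roughly 20 degrees C
CELL_SIZE_M = 1.0               # assumed: each grid cell is one meter wide

# Hypothetical maze: '#' is wall, '.' is open floor, fully walled border.
MAZE = (
    "#######",
    "#.....#",
    "#.###.#",
    "#.....#",
    "#######",
)

DIRECTIONS = {"north": (-1, 0), "south": (1, 0), "east": (0, 1), "west": (0, -1)}


def distance_to_wall_m(row, col, direction):
    """Distance from the center of an open cell to the nearest wall face."""
    d_row, d_col = DIRECTIONS[direction]
    open_cells = 0
    r, c = row + d_row, col + d_col
    while MAZE[r][c] != "#":
        open_cells += 1
        r, c = r + d_row, c + d_col
    # The listener stands at the cell center, so the wall face is half
    # a cell beyond the last open cell.
    return (open_cells + 0.5) * CELL_SIZE_M


def echo_delays_ms(row, col):
    """Round-trip echo delay, in milliseconds, toward each compass direction."""
    return {
        name: 2.0 * distance_to_wall_m(row, col, name) / SPEED_OF_SOUND_M_PER_S * 1000.0
        for name in DIRECTIONS
    }


if __name__ == "__main__":
    for name, delay in echo_delays_ms(1, 1).items():
        print(f"{name}: first echo after {delay:.2f} ms")
```

A simulator built along these lines would then play a click followed by quieter copies at those delays, so that a long corridor ahead sounds different from a wall right in front of you.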

For blind and visually impaired individuals, echolocation represents genuine independence. Traditional mobility aids like white canes and guide dogs remain essential, but echolocation adds a complementary layer of spatial awareness. Where a cane detects obstacles at ground level through touch, echolocation reveals hanging branches, overhangs, approaching vehicles, and the overall layout of unfamiliar spaces.

The psychological impact shouldn't be underestimated. Studies following training participants consistently report increased confidence, reduced anxiety in navigation, and greater willingness to explore new environments. When you can perceive your surroundings independently, even imperfectly, it fundamentally changes your relationship with physical space.

Beyond visual impairment, echolocation has niche applications in specialized fields. Search and rescue personnel working in smoke-filled buildings or collapsed structures sometimes train in basic echolocation to supplement their equipment. Cave explorers and spelunkers use it to map passages before committing to tight squeezes. Some military programs have explored it for situations where visual equipment might fail or reveal position.

The educational value extends to anyone interested in perception and neuroscience. Learning echolocation provides direct, visceral experience of neuroplasticity. Over weeks of practice, novel sensations gradually become interpretable information. It's one thing to read about the brain's adaptability in a textbook, quite another to experience it firsthand.

While human echolocation relies on biology and training, technology is beginning to augment and enhance the capability. Several research teams are developing sensory substitution devices that convert visual information into sound patterns, though these differ from traditional echolocation.

More directly relevant are tools that optimize the echolocation process itself. Specialized microphones can capture and amplify faint echoes, making them easier to learn from. Audio processing software can isolate echo frequencies and present them more clearly to novice learners. Think of it as training wheels for your auditory system.

Some experimental systems generate optimized click sounds through small speakers, producing more consistent acoustic pulses than tongue clicks. While purists argue this defeats the self-contained nature of echolocation, it could help people with speech or motor difficulties participate in training.

The most intriguing development involves real-time spatial audio rendering. Researchers are creating augmented reality systems that simulate echolocation in virtual environments, allowing learners to practice in controlled, progressively challenging scenarios. You could train in a virtual warehouse or forest before attempting the real thing, building skills with zero physical risk.

Looking further ahead, brain-computer interfaces might eventually allow direct communication between echolocation processing and assistive technology. Imagine a system that detects what your brain is trying to perceive from echoes and provides subtle enhancement or confirmation. We're nowhere near that yet, but the foundational neuroscience is being mapped.

Here's where it gets philosophical. Echolocation challenges our assumptions about human sensory limits. We tend to think of our perceptual apparatus as fixed: two eyes, two ears, standard processing. But the reality is far more fluid. The brain's primary sensory regions aren't dedicated to specific inputs so much as they're specialized for certain types of information processing that usually come from particular senses.

Your visual cortex is optimized for spatial analysis and pattern recognition. Normally it receives visual data, but it turns out acoustic spatial data works just fine once proper connections form. The hardware is flexible. The labels we put on brain regions reflect typical usage, not hardwired constraints.

This has profound implications beyond echolocation. It suggests human sensory and cognitive enhancement might work through training and neuroplasticity as much as through technology. We might be surrounded by information our brains could learn to process if only we knew how to train them.

Other research supports this view. Studies of sensory substitution show blind individuals can learn to interpret camera feeds converted to soundscapes, essentially seeing through their ears using completely novel encoding schemes their brains never evolved to handle. Neuroplasticity operates at a level of abstraction that separates information from its carrier medium.

What comes next likely involves hybrid approaches. Pure biological echolocation will remain valuable for its simplicity and independence from technology. But augmented versions that combine human perception with computational assistance could push capabilities far beyond current limits.

Imagine wearable systems that enhance your natural echolocation by generating optimized acoustic pulses at frequencies humans can't produce, then pitch-shifting the echoes into our hearing range. You'd perceive detail and distance impossible with tongue clicks alone, while still using your brain's evolved spatial processing.

Machine learning could analyze echo patterns too subtle or fast for human perception, then present simplified versions that our brains can interpret. The AI doesn't replace your perception but acts as a preprocessor, highlighting features that matter most for navigation or object identification.

Integration with other sensory enhancement technologies creates even more possibilities. Systems combining echolocation with tactile feedback, spatial audio, and visual augmentation could produce multimodal environmental awareness far exceeding any single sense. You'd navigate with redundant, complementary information streams, robust to any single mode's failure.

The medical applications deserve attention too. Understanding how echolocation training induces neuroplastic changes might inform rehabilitation for stroke, traumatic brain injury, or neurodegenerative disease. If we can teach visual cortex to process sound in healthy adults, perhaps we can encourage damaged brain regions to take on new roles during recovery.

As echolocation training becomes more widespread, interesting cultural questions emerge. Should it be part of standard education for blind children, taught as early as orientation and mobility training with canes? Most experts say yes, though implementation remains limited by instructor availability and educational system inertia.

What about sighted children? Some argue basic echolocation should be universal education, a perceptual literacy everyone acquires like swimming or riding a bike. Others worry about adding to already packed curricula for minimal practical benefit to sighted individuals. The debate continues.

There's also the question of standardization versus diversity in training methods. Daniel Kish's FlashSonar emphasizes independent exploration and risk-taking, sometimes controversial when applied to children. Other programs take more cautious, structured approaches. Different cultures and educational philosophies produce different training methodologies, and it's not clear one approach is universally superior.

Rights and expectations matter too. As echolocation becomes recognized as a legitimate mobility technique, should public spaces be designed to support it? Overly sound-absorbent materials in architecture can create "acoustic dead zones" where echolocation fails. Should building codes consider this the way they consider wheelchair accessibility?

The most remarkable aspect of human echolocation might be how ordinary it is. This isn't a superhuman ability or genetic gift. It's a trainable skill using perceptual machinery we all possess. The seeming magic comes from neuroplasticity, the brain's fundamental flexibility that operates in every learning process, from language acquisition to playing guitar.

What echolocation reveals is that the boundaries of human perception are more negotiable than we assume. We walk around with tremendous untapped potential in our standard-issue brains, waiting for the right training to unlock it. Sound can become sight not through technology alone but through the remarkable adaptability of neural tissue.

For anyone curious about their own perceptual capabilities, basic echolocation makes an accessible experiment. You don't need special equipment or rare talent. Find a quiet room, start clicking, and pay attention to what comes back. Your brain might surprise you with what it can learn to hear.

The broader lesson extends beyond navigation or sensory substitution. It's about human potential and the plasticity that makes our species so adaptable. We're not locked into fixed ways of experiencing the world. With understanding, training, and persistence, we can expand our sensory horizons in ways that would have seemed impossible a generation ago.

The blind echolocators who pioneered these techniques didn't just develop a compensatory skill. They revealed something fundamental about human neurobiology that's now transforming how we understand perception, learning, and the improvable nature of human capability. That insight echoes far beyond any individual's navigation, resonating through education, medicine, and our understanding of what it means to sense and make sense of the world around us.

Ahuna Mons on dwarf planet Ceres is the solar system's only confirmed cryovolcano in the asteroid belt - a mountain made of ice and salt that erupted relatively recently. The discovery reveals that small worlds can retain subsurface oceans and geological activity far longer than expected, expanding the range of potentially habitable environments in our solar system.

Scientists discovered 24-hour protein rhythms in cells without DNA, revealing an ancient timekeeping mechanism that predates gene-based clocks by billions of years and exists across all life.

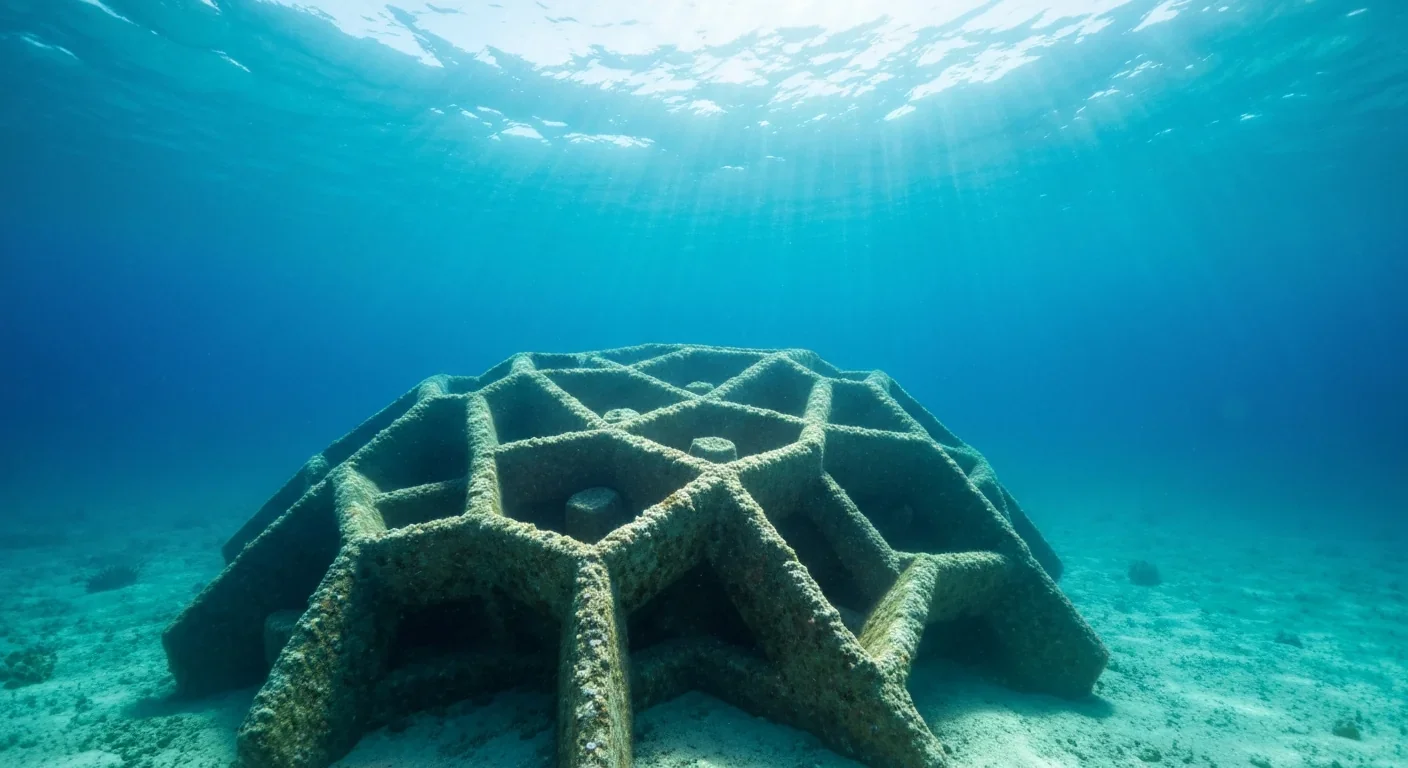

3D-printed coral reefs are being engineered with precise surface textures, material chemistry, and geometric complexity to optimize coral larvae settlement. While early projects show promise - with some designs achieving 80x higher settlement rates - scalability, cost, and the overriding challenge of climate change remain critical obstacles.

The minimal group paradigm shows humans discriminate based on meaningless group labels - like coin flips or shirt colors - revealing that tribalism is hardwired into our brains. Understanding this automatic bias is the first step toward managing it.

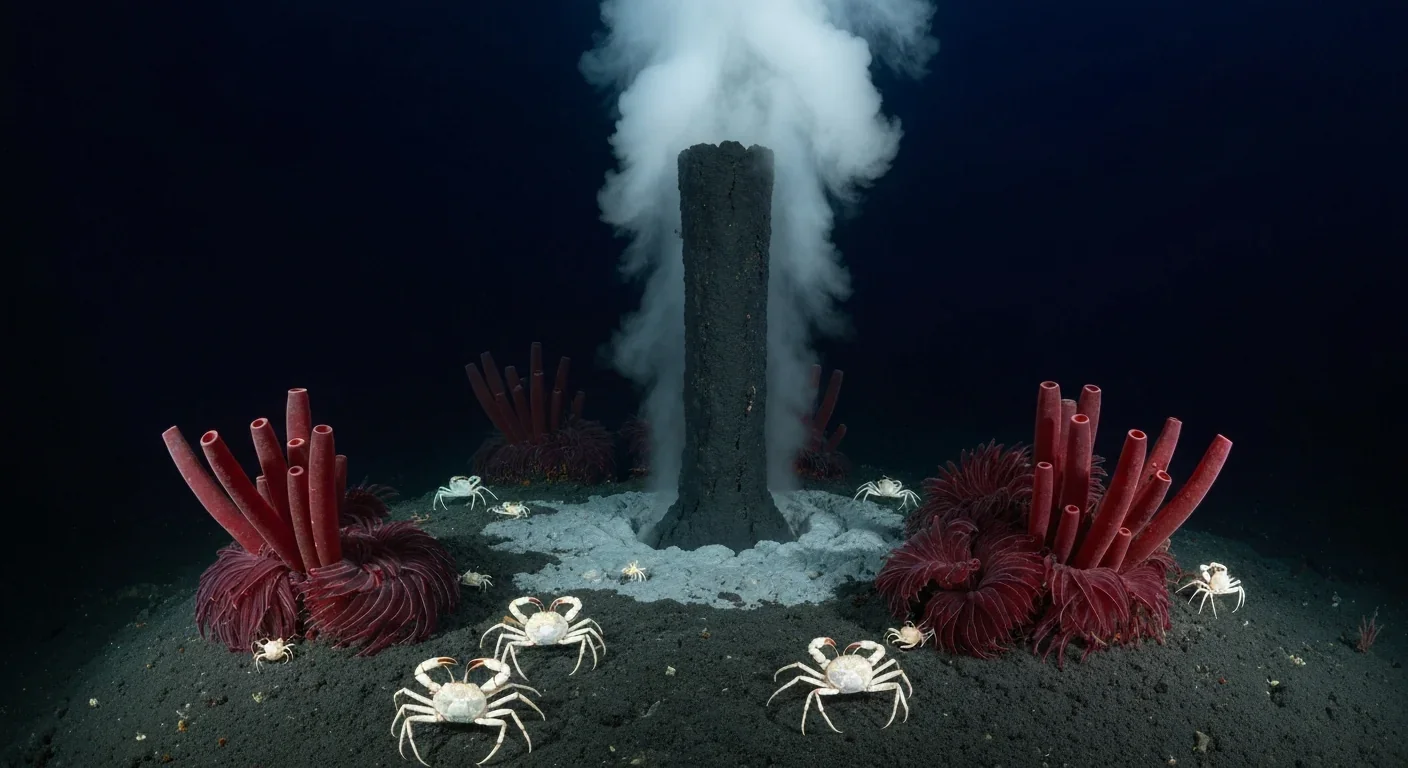

In 1977, scientists discovered thriving ecosystems around underwater volcanic vents powered by chemistry, not sunlight. These alien worlds host bizarre creatures and heat-loving microbes, revolutionizing our understanding of where life can exist on Earth and beyond.

Automated systems in housing - mortgage lending, tenant screening, appraisals, and insurance - systematically discriminate against communities of color by using proxy variables like ZIP codes and credit scores that encode historical racism. While the Fair Housing Act outlawed explicit redlining decades ago, machine learning models trained on biased data reproduce the same patterns at scale. Solutions exist - algorithmic auditing, fairness-aware design, regulatory reform - but require prioritizing equ...

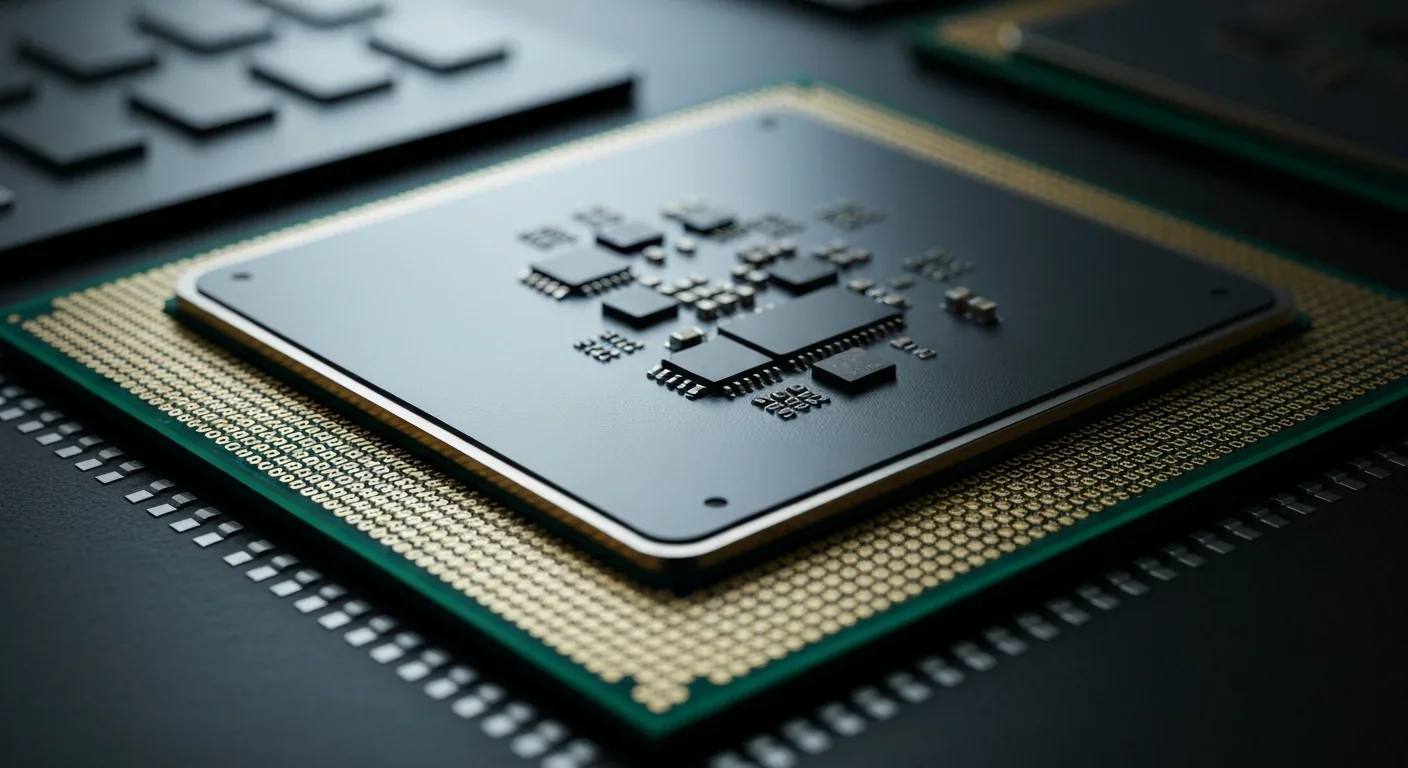

Cache coherence protocols like MESI and MOESI coordinate billions of operations per second to ensure data consistency across multi-core processors. Understanding these invisible hardware mechanisms helps developers write faster parallel code and avoid performance pitfalls.