Why We Pick Sides Over Nothing: Instant Tribalism Science

TL;DR: Moral dumbfounding is the experience of feeling strongly that something is wrong yet being unable to explain why. Research suggests that gut reactions usually drive moral judgments first, with reasoning following to defend what we already feel - a finding that is reshaping how we understand ethics, law, and decision-making.

You've felt it before. Someone describes a scenario, your stomach turns, you know it's wrong. But when pressed to explain why, you fumble. The reasons you offer fall apart under scrutiny, yet the gut-level revulsion remains. Welcome to moral dumbfounding, one of psychology's most fascinating puzzles.

Imagine two adult siblings who decide, just once, to sleep together. They use protection. No one gets hurt. No one finds out. They both agree it was fine, but decide never to do it again. Now, here's the question: was what they did wrong?

Most people say yes immediately. But when researchers ask them to explain why, the answers crumble. "Because... it could lead to genetic problems." (They used protection.) "Because it damages their relationship." (They both said it was fine.) "Because... I don't know, it just is wrong."

That last response is moral dumbfounding in action. Psychologist Jonathan Haidt and his colleagues coined the term in studies run around 2000, and Haidt built a broader theory on it in his influential 2001 paper, "The Emotional Dog and Its Rational Tail," which has been cited over 7,800 times. His core insight turned moral psychology on its head: most of the time, we don't reason our way to moral conclusions. We feel our way there, then scramble to justify what we already believe.

Think of your mind as having two systems. System 1 operates automatically, fast, and emotionally. It's the voice that screams "danger!" when you see a snake, or "disgusting!" when you smell rotten food. System 2 is slower, deliberate, and logical. It's what you use to solve math problems or plan your taxes.

When you encounter a moral dilemma, System 1 fires first. It delivers an instant verdict based on deep-seated intuitions shaped by evolution, culture, and personal experience. System 2 kicks in afterward, not to question the verdict, but to defend it. In Haidt's metaphor, reasoning is the tail, and it is wagged by the emotional dog.

This dual-process framework, popularized by Nobel Prize winner Daniel Kahneman, helps explain why moral arguments so rarely change minds. You're not really debating principles; you're defending a conclusion your brain reached almost instantly.

Haidt's early studies used what psychologists call "harmless taboo violations": scenarios designed to trigger disgust or disrespect without any actual harm. Beyond the sibling example, participants were asked about a family eating their pet dog after it had been killed by a car, or a woman cutting up an old national flag to use as rags for cleaning her bathroom.

Every scenario was carefully constructed to eliminate rational objections. No one suffered. No laws were broken. No consequences followed. Yet participants consistently condemned the acts, and when their reasons were systematically dismantled, they'd shrug and say, "I don't know, I can't explain it, I just know it's wrong."

More recent experiments have pushed this further. Researchers created a real-life trolley problem where participants had to decide who would receive painful electric shocks. The twist? Participants made the choice twice.

What happened was striking. Many people reversed their decision the second time. The reason? Context. After witnessing the suffering they'd caused in round one, their moral intuition shifted. As the researchers noted, "knowing their first decision told us nothing about what they might choose the second time."

The same act felt right in one context and wrong in another, like a musical note that's harmonious in one melody and discordant in the next.

Evolution didn't design us to be moral philosophers. It designed us to survive in social groups where quick judgments about right and wrong could mean the difference between acceptance and exile, cooperation and conflict.

Disgust is widely thought to have evolved as a pathogen-avoidance mechanism. If something smelled rotten, you didn't eat it. Simple. But at some point in our evolutionary history, the same system appears to have been repurposed for social regulation. Behaviors that threaten group cohesion can now trigger the same visceral reaction as spoiled meat.

Haidt's Moral Foundations Theory identifies at least five innate moral systems: Care, Fairness, Loyalty, Authority, and Sanctity. Different cultures weight these differently. Liberals tend to prioritize Care and Fairness; conservatives endorse all five more equally. But everyone relies on intuitive judgments first, reasoning second.

This explains why political debates feel so futile. You're not arguing facts; you're defending intuitions that feel as real as the ground beneath your feet.

Moral dumbfounding isn't just a laboratory curiosity. It shapes courtrooms, social media mobs, and workplace ethics in profound ways.

Jurors are supposed to weigh evidence rationally, but their gut reactions to defendants, whether based on appearance, demeanor, or social identity, often shape verdicts. Research suggests that judgments of people and judgments of actions are intertwined: the same crime can be viewed more or less harshly depending on who committed it.

When a judge instructs a jury to disregard inadmissible evidence, that's asking System 2 to override System 1. Studies suggest it often fails. The emotional dog has already made up its mind.

Why do Twitter pile-ons feel so righteous? Because outrage is a moral emotion, and moral emotions don't require justification. Someone posts something that feels wrong, and within minutes, thousands of people are explaining why it's terrible, often with contradictory reasons that don't hold up to scrutiny.

The common thread isn't logical consistency; it's shared disgust. We're not reasoning together. We're rationalizing together.

Business ethics often comes down to gut feelings dressed up as policy. When a CEO says "this doesn't feel right," they're not always articulating a clear principle. They may be experiencing a version of moral dumbfounding. Sometimes that intuition is wise, drawing on years of tacit knowledge. Other times it's biased, shaped by cultural assumptions that don't stand up to scrutiny.

The challenge is distinguishing between the two.

Not everyone buys Haidt's story. Psychologist Joshua Greene argues that moral reasoning can override intuition, especially when we engage what he calls controlled processing. His research shows that utilitarian judgments, the kind that require calculating consequences, recruit different brain regions than knee-jerk deontological judgments.

In Greene's Dual Process Model, intuitive responses are fast and emotional (like condemning the sibling scenario), while utilitarian reasoning is slow and deliberate (like accepting that sacrificing one to save five might be justified). The key is that the deliberative system can win, if given time and cognitive resources.

Paul Bloom goes further, arguing that reasoning can reshape our moral intuitions over time. Historical shifts on issues like slavery, women's rights, and LGBTQ+ equality didn't happen through pure emotion. They required arguments, evidence, and the slow work of persuading people that their gut reactions were wrong.

So the picture is more nuanced: intuition dominates in the moment, but reason can recalibrate our moral compass if we let it.

What feels obviously wrong to you might feel obviously fine to someone across the world. Anthropologist Richard Shweder identified three major moral frameworks that cultures emphasize differently:

The ethic of autonomy (dominant in Western liberal societies) prioritizes individual rights and harm prevention. If no one gets hurt, there's no foul.

The ethic of community emphasizes duty, hierarchy, and group loyalty. What matters is whether an act strengthens or weakens social bonds.

The ethic of divinity focuses on purity, sanctity, and the sacred. Some acts are wrong not because they harm anyone, but because they violate what's sacred.

The sibling scenario triggers dumbfounding in Western participants because it violates the ethic of divinity (sacred boundaries) but not the ethic of autonomy (no harm). In cultures where the ethic of divinity is more central, the reaction may be less confused, because people have a ready vocabulary for articulating the violation.

This cross-cultural variation reveals that moral intuitions are partly learned, not purely innate. What's universal is the mechanism, the reliance on gut feelings before reasons. What varies is which gut feelings different cultures cultivate.

Here's how to recognize when your moral intuitions are running the show without rational backup:

1. You can't explain your position clearly. If you find yourself saying "it just is wrong" or "I can't explain it, but I know it's wrong," that's a red flag.

2. Your reasons shift when challenged. When one justification falls apart and you immediately reach for another unrelated reason, you're probably rationalizing.

3. You feel disgust, not concern. Harm-based moral judgments tend to elicit concern and empathy for a victim. Purity-based judgments tend to elicit disgust, often accompanied by anger. If you feel disgust but can't name a victim, ask whether anyone is actually being harmed.

4. You judge the person, not just the act. If your moral verdict changes based on who did something rather than what they did, that's a sign social intuitions are overriding principle.

You can't eliminate moral intuitions, and you shouldn't try. They're often right, distilling wisdom from countless past experiences. But you can train yourself to recognize when intuition is leading you astray.

When you feel a strong moral reaction, pause. Name the emotion: "I'm feeling disgust" or "I'm feeling outrage." This simple act can help engage System 2, creating space between feeling and judgment.

Your brain will automatically search for reasons to support your gut feeling. Force it to do the opposite. Ask, "What would someone who disagrees with me say? What evidence would challenge my view?"

If the same act were committed by someone you admire, would you judge it the same way? If a political opponent proposed this policy, would you still support it? Reversals expose bias.

Moral dumbfounding thrives on speed. When you have to decide quickly, intuition wins. When you have time to deliberate, reason has a fighting chance. If the decision is important, sleep on it.

Expose yourself to different moral frameworks. Read arguments from people who weight Care, Fairness, Loyalty, Authority, and Sanctity differently than you do. You won't always be persuaded, but you'll understand the landscape better.

As we move further into the digital age, the stakes of moral dumbfounding are rising. Algorithms amplify outrage, social media collapses deliberation time, and polarization hardens intuitions into tribal identities.

But awareness is growing. Psychologists are developing interventions to help people recognize and mitigate intuitive biases. Researchers are also studying moral elevation, the positive emotion we feel when witnessing acts of exceptional virtue, which can motivate self-improvement and recalibrate our moral compass.

Educational programs are teaching students to distinguish feelings from facts, to recognize when emotions are being used as evidence. Businesses are creating ethics frameworks that force decision-makers to articulate principles, not just gut reactions.

The goal isn't to eliminate moral intuition. It's to create a healthy dialogue between the emotional dog and the rational tail, where each informs the other.

Understanding moral dumbfounding doesn't make you a moral relativist. It makes you a better moral thinker. You can still hold strong convictions, but you'll hold them more humbly, with awareness of their intuitive roots.

You'll be slower to condemn, quicker to seek understanding. You'll recognize that the person across the table isn't stupid or evil; they're operating from a different set of gut feelings, shaped by different experiences and cultural contexts.

And when you find yourself saying "I just know it's wrong," you'll pause. You'll ask whether that knowledge comes from wisdom or from disgust, from principle or from prejudice. You'll give reason a chance to catch up to intuition.

That won't make moral decisions easy. But it will make them better. In a world increasingly divided by moral certainty, the willingness to question our own gut feelings might be the most important skill we can cultivate.

Because sometimes, the hardest person to persuade is yourself. And the most important argument to win is the one between your intuition and your reason.

Ahuna Mons on dwarf planet Ceres is the solar system's only confirmed cryovolcano in the asteroid belt - a mountain made of ice and salt that erupted relatively recently. The discovery reveals that small worlds can retain subsurface oceans and geological activity far longer than expected, expanding the range of potentially habitable environments in our solar system.

Scientists discovered 24-hour protein rhythms in cells without DNA, revealing an ancient timekeeping mechanism that predates gene-based clocks by billions of years and exists across all life.

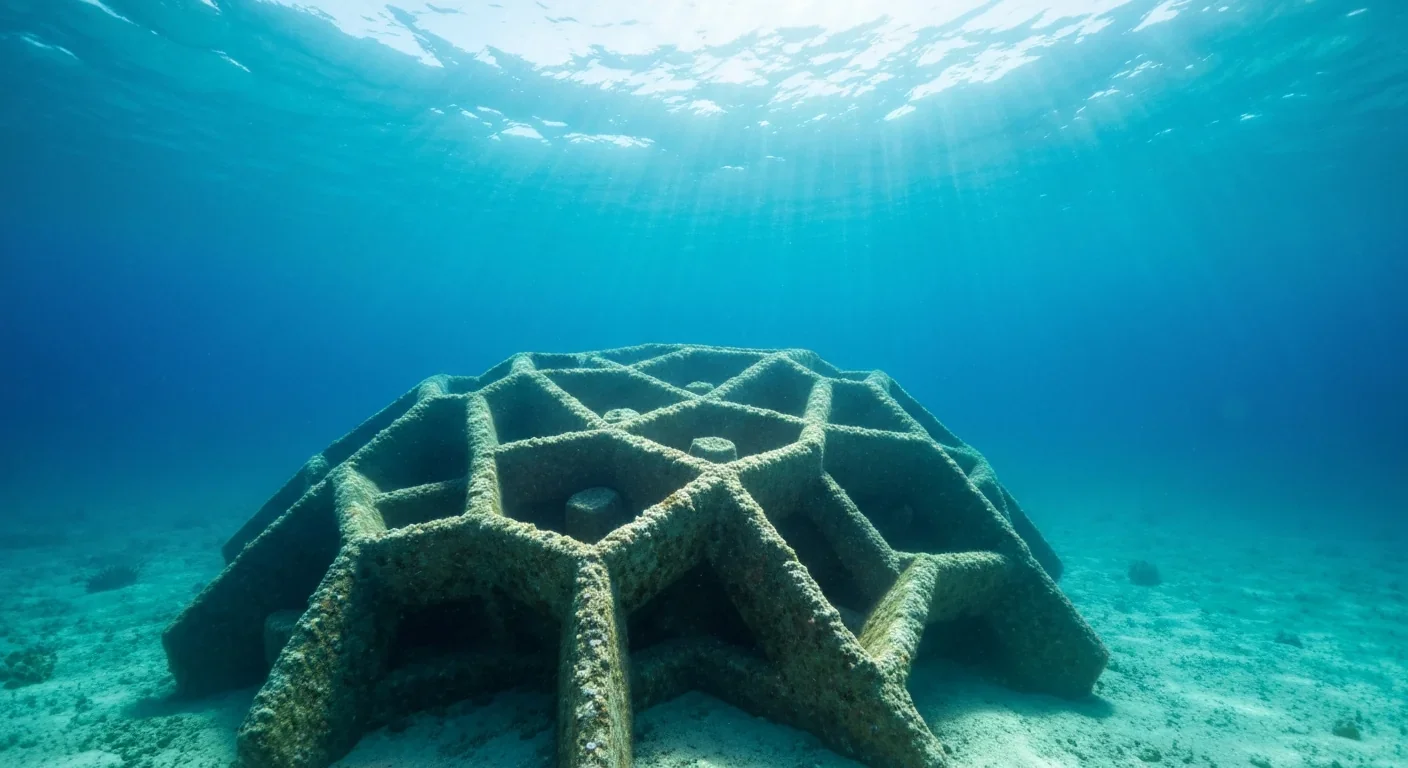

3D-printed coral reefs are being engineered with precise surface textures, material chemistry, and geometric complexity to optimize coral larvae settlement. While early projects show promise - with some designs achieving 80x higher settlement rates - scalability, cost, and the overriding challenge of climate change remain critical obstacles.

The minimal group paradigm shows humans discriminate based on meaningless group labels - like coin flips or shirt colors - revealing that tribalism is hardwired into our brains. Understanding this automatic bias is the first step toward managing it.

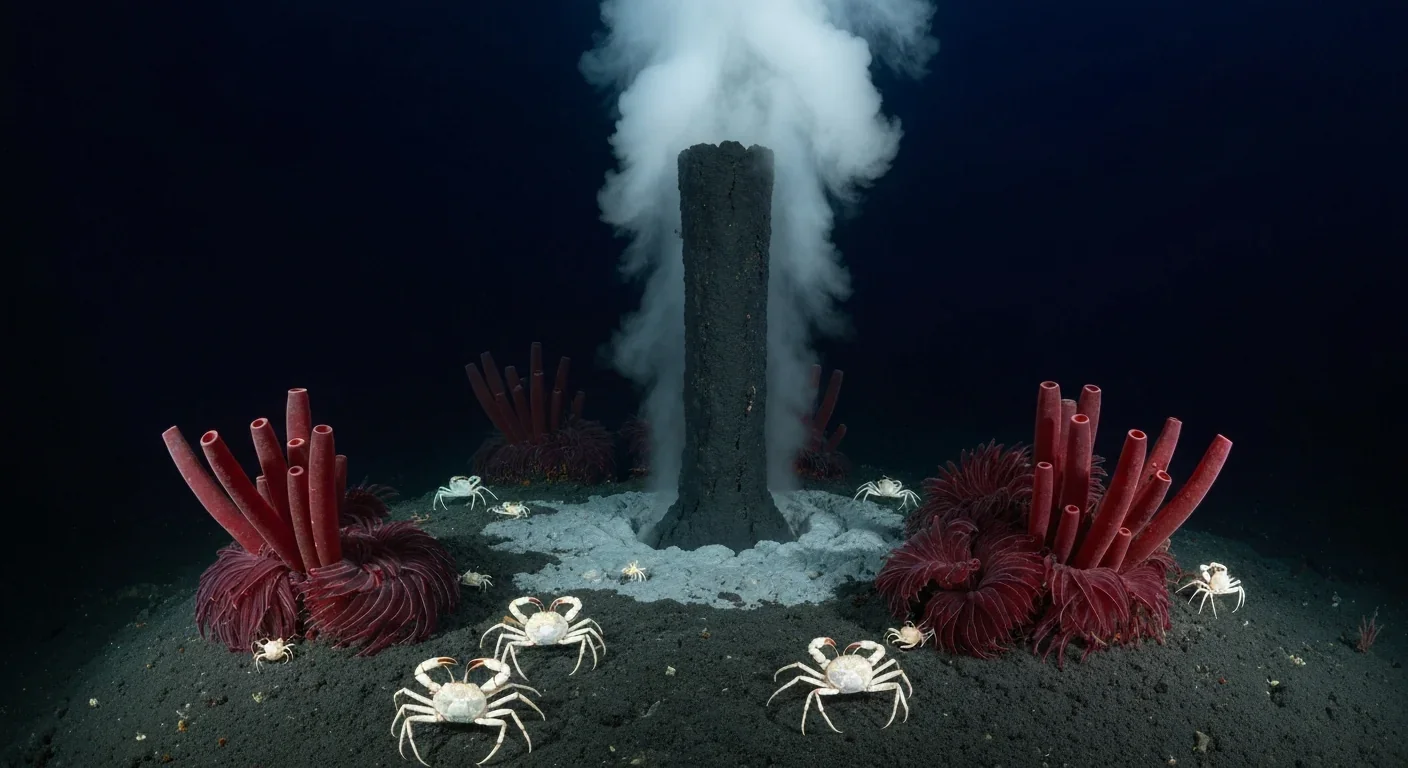

In 1977, scientists discovered thriving ecosystems around underwater volcanic vents powered by chemistry, not sunlight. These alien worlds host bizarre creatures and heat-loving microbes, revolutionizing our understanding of where life can exist on Earth and beyond.

Automated systems in housing - mortgage lending, tenant screening, appraisals, and insurance - systematically discriminate against communities of color by using proxy variables like ZIP codes and credit scores that encode historical racism. While the Fair Housing Act outlawed explicit redlining decades ago, machine learning models trained on biased data reproduce the same patterns at scale. Solutions exist - algorithmic auditing, fairness-aware design, regulatory reform - but require prioritizing equ...

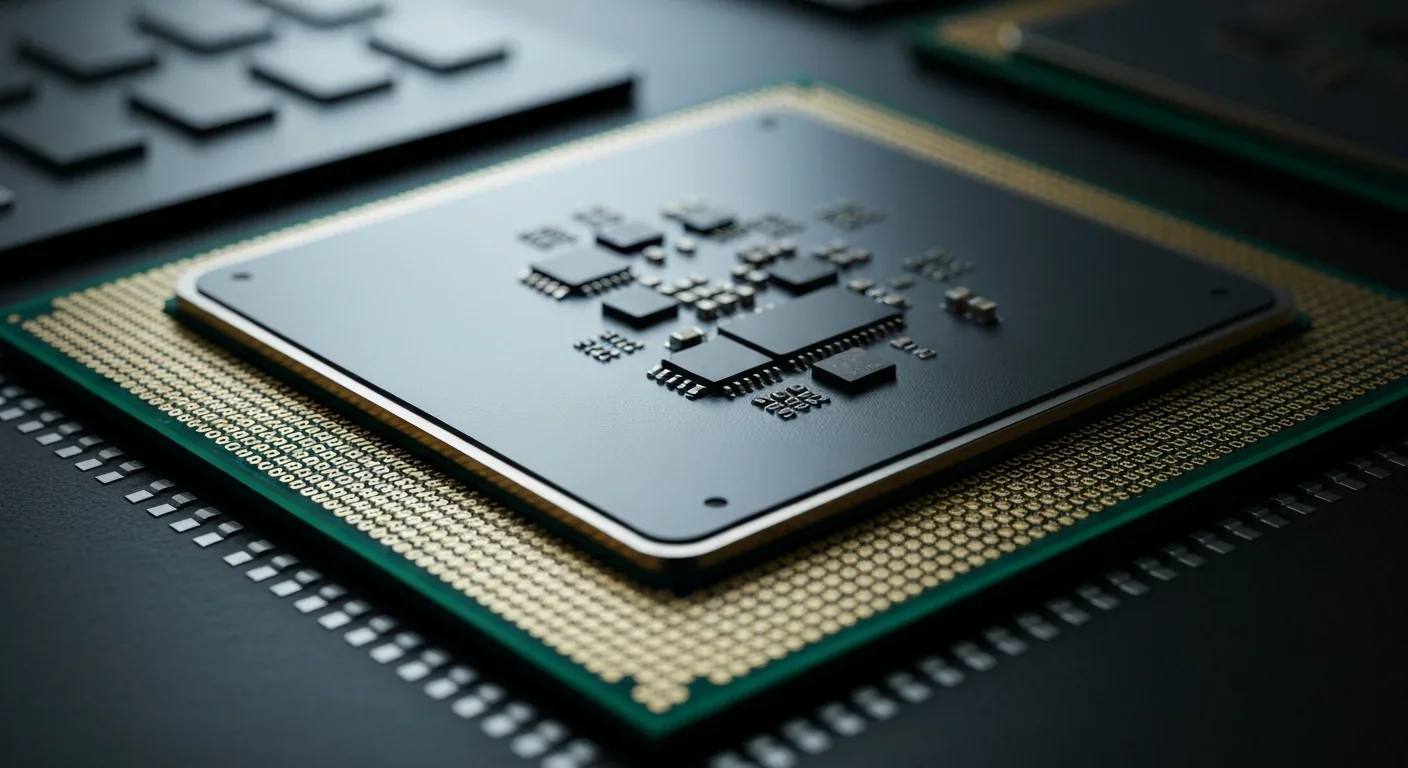

Cache coherence protocols like MESI and MOESI coordinate billions of operations per second to ensure data consistency across multi-core processors. Understanding these invisible hardware mechanisms helps developers write faster parallel code and avoid performance pitfalls.