Why We Pick Sides Over Nothing: Instant Tribalism Science

TL;DR: The third-person effect is a psychological bias where people believe media influences others more than themselves. This blind spot makes us vulnerable to manipulation, fuels censorship support, and undermines media literacy efforts.

You've probably done it. Scrolled past a political ad and thought, "Who falls for this stuff?" Maybe you've seen a friend share obvious clickbait and wondered how they didn't see through it. Here's the uncomfortable truth: you're probably just as susceptible to persuasion as anyone else. You just can't see it happening to you.

This blind spot has a name. Psychologists call it the third-person effect, and it's one of the most consistent findings in media research. Since W. Phillips Davison first documented it in 1983, hundreds of studies have confirmed the same pattern: people systematically overestimate how much media influences others while underestimating its effect on themselves.

The implications go way beyond academic curiosity. This bias shapes how we consume news, engage with social media, and participate in democracy. Understanding it isn't just intellectually interesting; it's practically essential for anyone trying to think critically in an age of information overload and manipulation.

Davison's original insight came from an unusual source: a World War II propaganda episode in the Pacific. Japanese forces dropped leaflets on a U.S. unit of Black soldiers led by white officers, trying to persuade the soldiers to give up. What caught Davison's attention wasn't whether the propaganda worked; there was no evidence it affected the soldiers at all. It was how their officers reacted. They didn't worry about the leaflets affecting themselves. They worried about the effect on others, and the unit was withdrawn the next day.

This pattern showed up everywhere Davison looked. When he surveyed people about media influence, the same dynamic repeated: "Sure, advertising works on other people, but I see through it." "Those negative political ads might sway voters, just not me." "Fake news is a problem because it fools everyone else."

The research painted a remarkably consistent picture. Reid and Soley found the same pattern with advertising in 1982, and study after study replicated the finding across different media, different messages, and different cultures. By now, the effect has been documented in over 200 published studies.

What makes this particularly fascinating is that it happens even when people know about the bias. Telling someone "you're probably underestimating media's influence on you" doesn't make the effect go away. The blind spot persists.

Walk through a typical day and you'll encounter the third-person effect constantly. You see a sponsored post on Instagram. Your immediate thought? "I know this is an ad, so it won't work on me." Meanwhile, you've just spent three minutes looking at products you hadn't heard of until the ad appeared.

Political campaigns exploit this relentlessly. The Cambridge Analytica scandal revealed how microtargeted political ads used psychographic profiling to influence voters. When the story broke, most people's reaction was predictable: outrage that other voters had been manipulated. Very few people considered whether they themselves had been targets.

Recent research on social media makes the pattern even clearer. A 2015 study by Antonopoulos and colleagues found what they called the "Web Third-Person Effect". The more shares and engagement a piece of content received, the more people believed it influenced others. But personal susceptibility? That stayed constant regardless of how viral something went.

The effect appears across virtually every type of media content. Violence in movies and video games. Sexualized advertising. Fake news and misinformation. Hate speech online. In each case, people consistently judge themselves as more resistant than the average person.

Here's where it gets weird: positive messages can flip the effect. When researchers studied public service announcements or educational content, people often thought the beneficial messages would help themselves more than others. Researchers call this the first-person effect. The bias isn't really about negativity; it's about how being influenced makes us look. Falling for junk feels gullible, while taking good advice feels smart.
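Researchers usually quantify this as a perceptual gap: a respondent's rating of a message's influence on others minus their rating of its influence on themselves. A positive gap is a third-person perception; a negative gap, the pattern people tend to show for desirable messages, is a first-person perception. The sketch below assumes a hypothetical 1-to-7 rating scale and invented responses purely for illustration; it does not reproduce any study's data.

```python
from statistics import mean

# Hypothetical survey responses on a 1-7 scale (1 = no influence, 7 = strong influence).
# Each tuple is (rating of influence on self, rating of influence on others).
# These numbers are invented for illustration, not taken from any study.
responses = {
    "negative political ad": [(2, 5), (3, 6), (2, 4), (1, 5)],
    "public health PSA": [(5, 3), (6, 4), (4, 4), (5, 3)],
}

def perceptual_gap(pairs):
    """Mean of (others - self): > 0 is a third-person, < 0 a first-person perception."""
    return mean(others - self_rating for self_rating, others in pairs)

def label(gap):
    """Name the direction of the gap."""
    if gap > 0:
        return "third-person perception"
    if gap < 0:
        return "first-person perception"
    return "no gap"

for message, pairs in responses.items():
    gap = perceptual_gap(pairs)
    print(f"{message}: gap = {gap:+.2f} ({label(gap)})")
```

With these made-up ratings, the ad produces a positive gap and the PSA a negative one, which is the sign flip the paragraph describes.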

So why can't we see our own susceptibility? The answer involves several psychological mechanisms working together, and none of them makes us look particularly rational.

First, there's ego protection. Admitting you're influenced by propaganda or manipulation feels like admitting you're gullible. That threatens your self-image as a smart, independent thinker. It's psychologically easier to believe that you're the exception, that your critical thinking skills make you immune.

This connects to what psychologists call self-enhancement bias. We consistently rate ourselves as above average on desirable traits. Most people think they're better-than-average drivers, smarter than typical, and yes, more resistant to persuasion. Statistically, most people can't be better than the typical (median) person, but our brains don't care about math when protecting our egos.

Second, there's the visibility problem. You can see when other people change their opinions or buy products after exposure to media. You can't easily observe the same process in yourself. Your own thought processes feel natural and internally generated, even when they're actually responding to external influences.

Think about how advertising works. You don't watch a car commercial and immediately decide to buy that car. Instead, weeks later when you're car shopping, certain brands just "feel right" or "come to mind first." The influence is real but invisible. Meanwhile, when your friend buys the exact car from that commercial, the connection seems obvious.

Third, we use different standards for ourselves and others. Research by Perloff and others has shown that perceived message desirability strongly moderates the effect. When we judge media's impact on ourselves, we focus on our intentions and critical thinking. When judging impact on others, we focus on the message's persuasive power.

This explains why the effect is stronger for content we consider undesirable. Violent media, negative political ads, or obvious propaganda trigger stronger third-person perceptions. We're especially motivated to believe that while this bad content might harm others, we're sophisticated enough to resist it.

Social distance amplifies everything. The more different from us someone seems, the more we assume media will affect them. People think media influences their community less than strangers, their friends less than their community, and themselves least of all. It's not just about ego; it's about perceived similarity.
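The social-distance pattern can be checked the same way: collect perceived-influence ratings separately for each reference group and see whether they rise as the group gets more distant. The group names, the ratings, and the 1-to-7 scale below are hypothetical, chosen only to show the ordering the paragraph describes.

```python
from statistics import mean

# Reference groups ordered from closest to most distant (hypothetical ordering).
groups = ["myself", "my friends", "my community", "strangers"]

# Invented 1-7 ratings of "How much does this media content influence ___?"
ratings = {
    "myself": [2, 3, 2, 2],
    "my friends": [3, 3, 4, 3],
    "my community": [4, 5, 4, 4],
    "strangers": [6, 5, 6, 6],
}

def influence_by_distance(groups, ratings):
    """Return (group, mean rating) pairs in order of increasing social distance."""
    return [(g, mean(ratings[g])) for g in groups]

def rises_with_distance(profile):
    """True if perceived influence strictly increases as social distance grows."""
    values = [value for _, value in profile]
    return all(a < b for a, b in zip(values, values[1:]))

profile = influence_by_distance(groups, ratings)
self_mean = profile[0][1]
for group, value in profile:
    # Gap relative to the rating for oneself.
    print(f"{group:<13} mean = {value:.2f}  gap vs. self = {value - self_mean:+.2f}")
print("Gradient present:", rises_with_distance(profile))
```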

Recent neuroscience research hints at even deeper mechanisms. A 2024 study reported that when people evaluated media influence on themselves versus others, different brain regions activated. Self-evaluation engaged areas associated with introspection and self-reflection, while evaluating others activated regions linked to theory of mind and social judgment. That suggests we may be using different cognitive processes for the two judgments.

This isn't just an interesting quirk of human psychology. The third-person effect has serious implications for how we navigate information ecosystems, especially in the political sphere.

It fuels support for censorship and media restrictions. If you believe that harmful content influences others but not you, you're more likely to support banning or restricting that content. Multiple studies have documented this "third-person effect on behavioral intentions." People who strongly perceive media effects on others are more willing to support speech restrictions, content moderation, and regulatory interventions.

This creates a troubling dynamic. The same person who's outraged that "the government wants to control what I can see" will simultaneously support restrictions on content that might influence "those other people who can't think for themselves." The bias makes us simultaneously libertarian about our own media consumption and paternalistic about everyone else's.

It makes us vulnerable to the very manipulation we think we're spotting. When you're convinced you're immune to propaganda, you stop looking for it in your own information diet. Research on misinformation susceptibility shows that overconfidence in your ability to spot fake news actually correlates with worse performance in identifying it.

This plays out dramatically in political polarization. Both sides of any political divide think the other side is brainwashed by biased media. Both sides think their own media consumption is appropriately critical and well-informed. The symmetry is almost perfect, and it makes political discourse nearly impossible.

It undermines media literacy efforts. Teaching people about propaganda techniques or media bias often backfires. Armed with knowledge about how media manipulates, people get better at spotting manipulation in media they already disagree with. But they don't improve at spotting it in sources that confirm their existing views. The third-person effect means media literacy training can actually increase polarization.

The 2024 presidential campaign provided countless examples. Voters across the political spectrum consumed partisan media, convinced they were seeing through bias while others were being misled. Post-election surveys showed that both winning and losing coalitions substantially overestimated how much "the other side" had been influenced by propaganda while minimizing influence on their own coalition.

Social media amplifies the effect dramatically. Platforms create echo chambers where everyone shares similar content, making it harder to notice when your own views are shifting. Meanwhile, you can easily observe the "crazy stuff" people in other bubbles are sharing. This asymmetry of visibility reinforces the third-person effect.

Studies on how fake news spreads have found that people are quite good at identifying false information from opposing viewpoints but terrible at catching it from sources they trust. The third-person effect isn't just failing to protect us from misinformation; it's actively helping false information spread within ideological communities.

So how do you counteract a bias you can't see? The research suggests several strategies, though none of them are easy.

Start with intellectual humility. One encouraging finding is that the third-person effect is weaker among people who score high on intellectual humility scales. These are people who can say "I might be wrong" without feeling like they're admitting defeat. They're comfortable with uncertainty and willing to update their beliefs.

Practically, this means regularly asking yourself uncomfortable questions. "What if I'm the one who's wrong about this?" "What if the sources I trust are actually biased?" "What information might I be missing?" These questions feel unnatural because our brains resist them, which is exactly why they're necessary.

Seek out genuine disagreement. Not performative disagreement where you debate people you think are obviously wrong. Real, substantive engagement with smart people who see things differently. This is hard to do on social media, where algorithms feed you content designed to trigger engagement through outrage.

Research on media bias perception shows that exposure to diverse viewpoints can reduce the third-person effect, but only if the exposure is genuine. Hate-reading the opposing side's worst takes doesn't count. You need to engage with their best arguments, presented by their most thoughtful advocates.

Focus on behavior, not intentions. Instead of asking "Am I biased?", track what you actually do. What sources do you share? What content do you engage with? What topics make you stop scrolling? Your behavior reveals influences that your introspection misses.

Keep a media diary for a week. Every time you share something or spend more than a minute reading something, write it down. At the end of the week, look for patterns. You'll probably be surprised by how predictable your consumption is, how much it clusters around certain topics or viewpoints, and how rarely you actually engage with content that challenges your existing beliefs.
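If you keep the diary in a spreadsheet or text file, a few lines of code can handle the end-of-week pattern check. This is a minimal sketch assuming a hypothetical diary format in which each entry records a source, a topic, and whether the item challenged your existing view; the field names and sample entries are invented.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass
class DiaryEntry:
    # Hypothetical fields; record whatever your own diary actually tracks.
    source: str
    topic: str
    challenged_my_view: bool

def summarize(entries, top_n=3):
    """Return the most frequent sources and topics plus the share of challenging items."""
    sources = Counter(e.source for e in entries)
    topics = Counter(e.topic for e in entries)
    challenging = sum(e.challenged_my_view for e in entries)
    share = challenging / len(entries) if entries else 0.0
    return sources.most_common(top_n), topics.most_common(top_n), share

# Invented sample week.
week = [
    DiaryEntry("Outlet A", "politics", False),
    DiaryEntry("Outlet A", "politics", False),
    DiaryEntry("Friend's repost", "politics", False),
    DiaryEntry("Outlet B", "technology", True),
    DiaryEntry("Outlet A", "economy", False),
]

top_sources, top_topics, share = summarize(week)
print("Top sources:", top_sources)
print("Top topics:", top_topics)
print(f"Share that challenged my view: {share:.0%}")
```

Counting is deliberately crude here. The point is to make the clustering visible in numbers you didn't choose in the moment, rather than relying on your impression of how balanced your reading is.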

Embrace the discomfort of being influenced. The third-person effect thrives on the assumption that being persuaded is bad. But changing your mind based on good evidence is exactly what rational people should do. The goal isn't to be immune to influence; it's to be influenced by the right things for the right reasons.

This reframing helps in practical ways. When you notice advertising working on you, instead of feeling embarrassed, get curious. "Huh, that ad made me want this product. How did it do that? What psychological buttons did it push?" Understanding your own susceptibility makes you better at navigating it.

Design your information environment deliberately. You can't eliminate bias, but you can structure your media consumption to reduce its worst effects. Follow people who regularly change their minds. Subscribe to publications that challenge your assumptions. Build friction into sharing, so you have to pause before amplifying content.

Recent research on media literacy in schools suggests that the most effective programs focus less on identifying bad information and more on cultivating good information habits. Teach people to pause before sharing. Encourage them to verify claims before accepting them. Build practices that slow down reactive information consumption.

Collaborate on fact-checking. Research shows the third-person effect is weaker when people work together to evaluate information. Having to explain your reasoning to someone else activates different cognitive processes than just consuming information alone. You become more aware of your own assumptions and more willing to consider alternatives.

This can be as simple as talking through news stories with friends before forming strong opinions. "Here's what I think this article is saying. What am I missing?" It feels inefficient compared to just scrolling and reacting, but it's dramatically more effective at reducing bias.

The third-person effect isn't going away. It's probably hardwired into how we think about ourselves versus others, a side effect of the cognitive processes that help us maintain self-esteem and navigate social environments. But understanding it gives us tools to work around it.

The next time you see propaganda and think "who falls for this?", pause. The answer might be you. Not because you're stupid or gullible, but because you're human. Media influence works precisely because it's invisible to the person being influenced.

This isn't cause for despair. It's cause for appropriate caution. The most dangerous bias is the one you can't see. The third-person effect makes us blind to our own susceptibility, but awareness of the bias creates at least a partial cure. You can't eliminate the blind spot entirely, but you can learn to navigate around it.

In an age of algorithmic manipulation, microtargeted advertising, and sophisticated propaganda, that navigation skill isn't optional anymore. It's essential. The people who will thrive in modern information ecosystems aren't the ones who think they're immune to influence. They're the ones who understand how influence works, including on themselves.

Start by admitting you're not special. Your critical thinking skills are probably about average. Your susceptibility to persuasion is probably normal. The media you consume influences you in ways you don't fully recognize. These aren't shameful admissions. They're the foundation for actually thinking clearly about the information you encounter every day.

Because here's the final twist: reading this article hasn't made you immune to the third-person effect. Even now, you probably think it applies more to others than to you. That's how persistent the bias is. The best we can do is stay humble, stay curious, and keep questioning our own certainty. In a world of information warfare, that might be the only real defense we have.

Ahuna Mons on dwarf planet Ceres is the solar system's only confirmed cryovolcano in the asteroid belt - a mountain made of ice and salt that erupted relatively recently. The discovery reveals that small worlds can retain subsurface oceans and geological activity far longer than expected, expanding the range of potentially habitable environments in our solar system.

Scientists discovered 24-hour protein rhythms in cells without DNA, revealing an ancient timekeeping mechanism that predates gene-based clocks by billions of years and exists across all life.

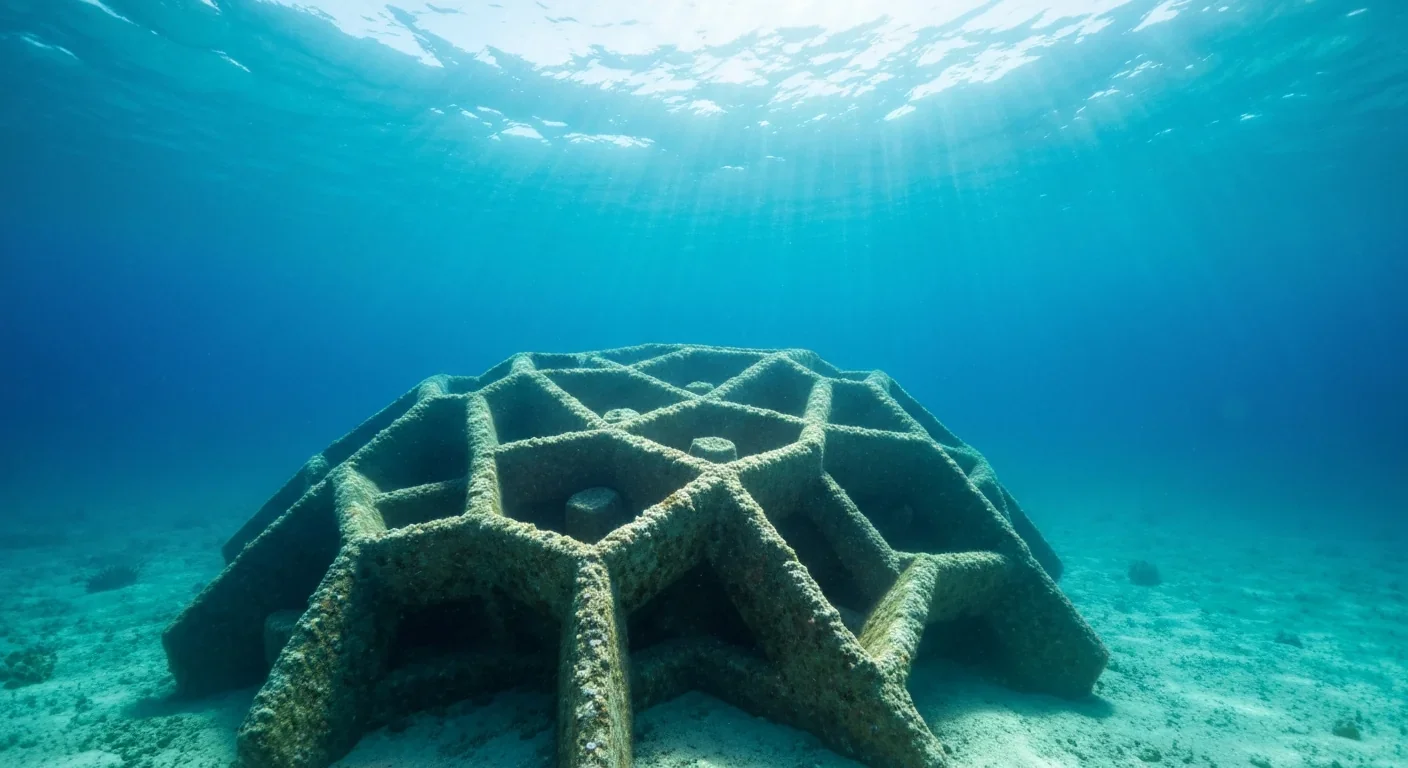

3D-printed coral reefs are being engineered with precise surface textures, material chemistry, and geometric complexity to optimize coral larvae settlement. While early projects show promise - with some designs achieving 80x higher settlement rates - scalability, cost, and the overriding challenge of climate change remain critical obstacles.

The minimal group paradigm shows humans discriminate based on meaningless group labels - like coin flips or shirt colors - revealing that tribalism is hardwired into our brains. Understanding this automatic bias is the first step toward managing it.

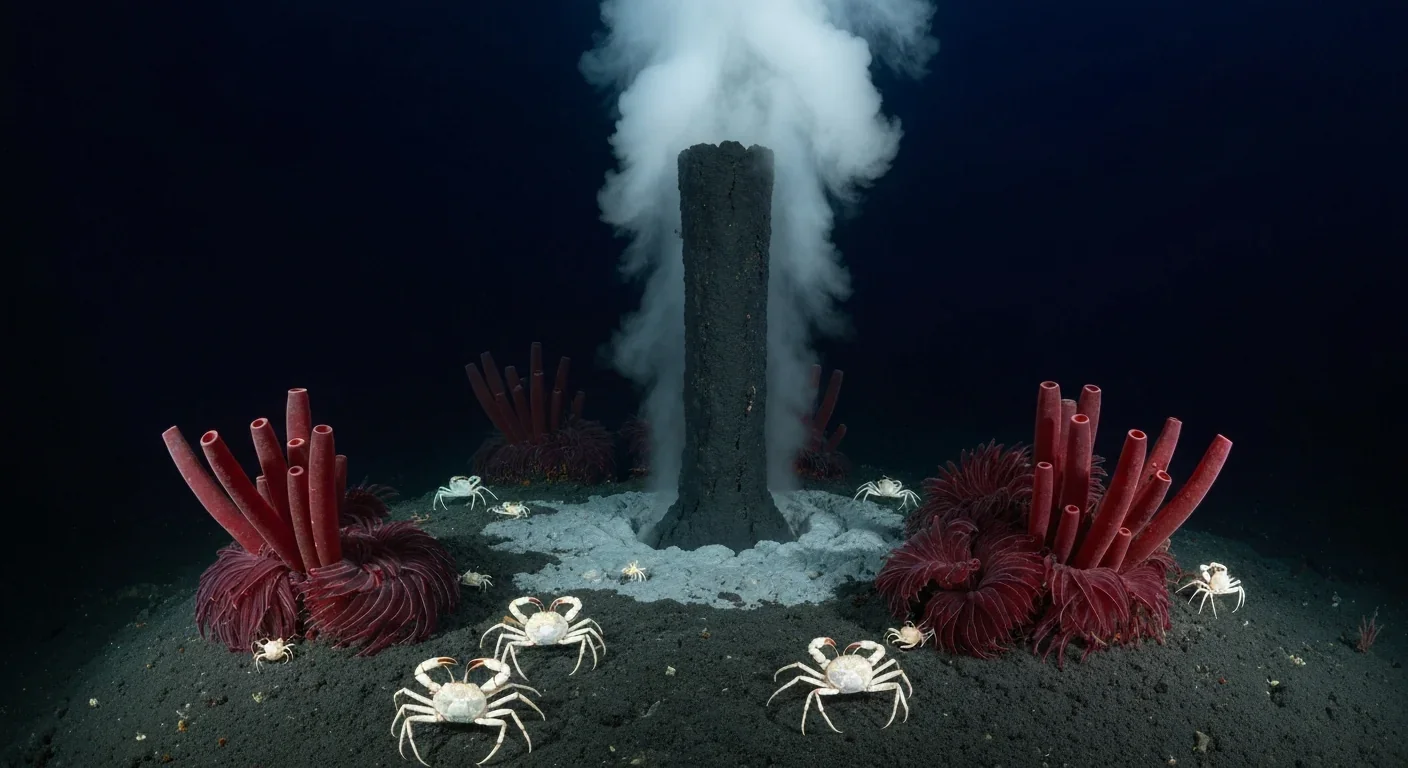

In 1977, scientists discovered thriving ecosystems around underwater volcanic vents powered by chemistry, not sunlight. These alien worlds host bizarre creatures and heat-loving microbes, revolutionizing our understanding of where life can exist on Earth and beyond.

Automated systems in housing - mortgage lending, tenant screening, appraisals, and insurance - systematically discriminate against communities of color by using proxy variables like ZIP codes and credit scores that encode historical racism. While the Fair Housing Act outlawed explicit redlining decades ago, machine learning models trained on biased data reproduce the same patterns at scale. Solutions exist - algorithmic auditing, fairness-aware design, regulatory reform - but require prioritizing equ...

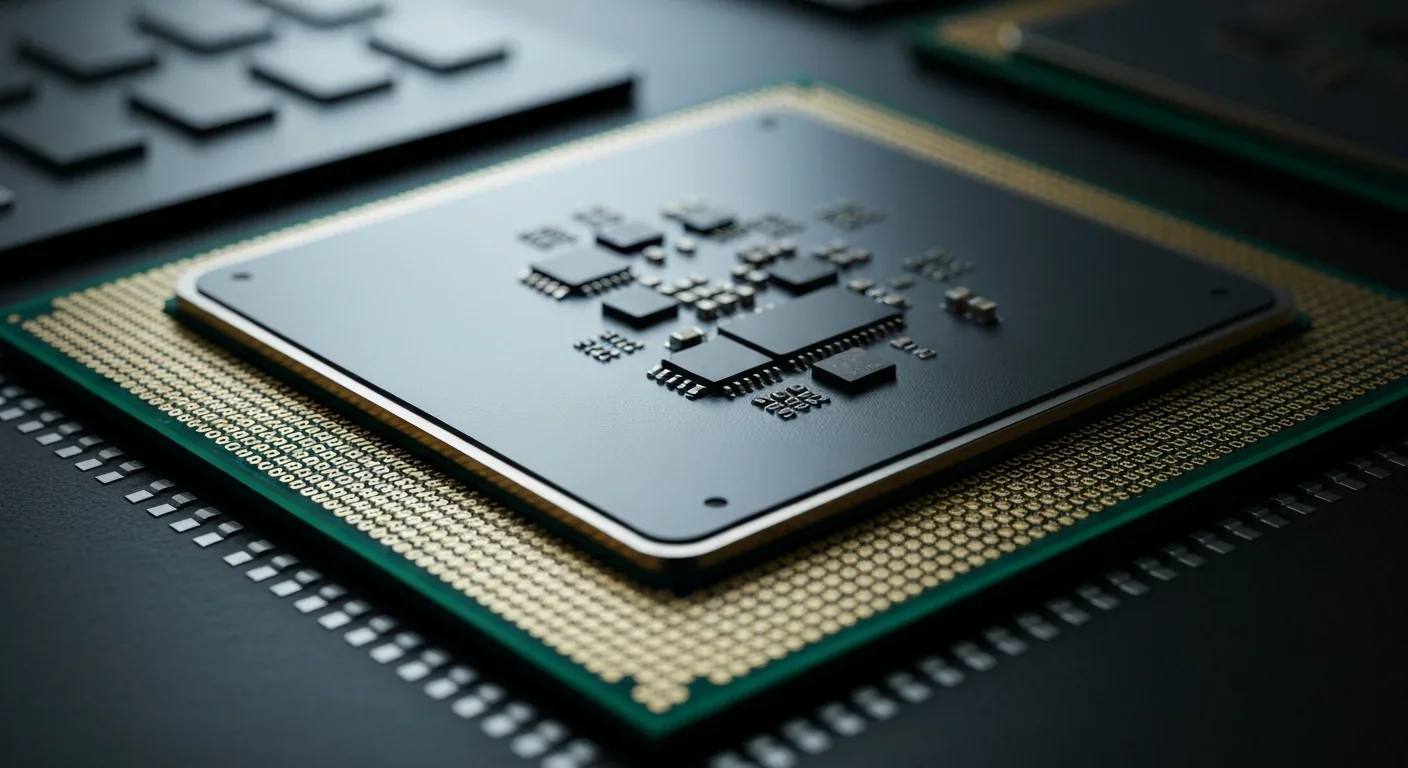

Cache coherence protocols like MESI and MOESI coordinate billions of operations per second to ensure data consistency across multi-core processors. Understanding these invisible hardware mechanisms helps developers write faster parallel code and avoid performance pitfalls.