Why We Pick Sides Over Nothing: Instant Tribalism Science

TL;DR: Your brain's reward circuitry, memory systems, and social cognition are optimized for certainty and belonging - not truth. Understanding the neuroscience behind confirmation bias, false memories, and tribal thinking can help you recognize when your mind misleads you and develop strategies to think more critically.

Imagine your brain as a relentless fact-checker that gets its deepest satisfaction not from finding the truth, but from confirming what it already believes. When you encounter information that aligns with your existing views, your reward circuitry responds with a burst of dopamine. You feel good. You feel validated. And your brain says, "Let's do that again."

This isn't a bug in human cognition. It's a feature, one that helped our ancestors survive on the savanna but now keeps us trapped in ideological bubbles, clinging to beliefs that facts can't dislodge. The neuroscience behind why we stick to false ideas reveals a troubling reality: our brains are optimized for certainty and belonging, not truth.

At the heart of belief formation lies the mesocorticolimbic dopamine system, a network connecting the ventral tegmental area (VTA) to the nucleus accumbens and prefrontal cortex. When you encounter information that confirms what you already think, this circuit lights up like a slot machine hitting the jackpot.

Dopamine neurons in the VTA increase their firing rate when a reward is anticipated. For beliefs, the "reward" is cognitive consonance: that comfortable feeling when new information fits neatly into your mental model. Research shows this same pathway activates during feeding, sex, and social bonding. In that sense, your brain handles belief confirmation much like other survival-relevant rewards.

The prefrontal cortex, which should act as your rational gatekeeper, gets hijacked by this process. Instead of objectively evaluating evidence, it becomes a motivated lawyer, selectively gathering data to defend the position your reward system already favors. This is why presenting someone with contradictory facts can backfire: you're not just challenging their ideas, you're threatening their neurochemical reward.

Memory isn't a video recorder. It's more like a Wikipedia page that anyone with access can edit, and your brain has extremely lax security protocols. Every time you recall a memory, you reconstruct it from fragments, filling gaps with inferences, expectations, and current beliefs.

The hippocampus, your brain's memory indexing system, activates similarly for both true and false memories. Professor David Gallo at the University of Chicago explains: "Both true memories and false memories will tend to activate the hippocampus." This helps explain why your brain can't always reliably distinguish between what actually happened and what you've convinced yourself happened.

False memories strengthen through a process called reconsolidation. Each time you recall something - accurately or not - you re-encode it, potentially adding new distortions. If you encountered misinformation while emotionally aroused (thanks, social media), your amygdala tagged it as important, making the false memory even stickier.

Sleep deprivation makes this worse. Studies show that people who are sleep-deprived during event encoding develop false memories at higher rates than rested individuals. Your exhausted brain becomes an even less reliable narrator.

Associate Professor Wilma Bainbridge notes, "We have to do time-saving, efficient tricks." Your brain prioritizes speed over accuracy, which served our ancestors well when deciding whether that rustling in the grass was wind or a predator. But in the information age, this shortcut makes you vulnerable to manipulation.

Repetition is truth's evil twin. The illusory truth effect describes how statements become more believable simply through repeated exposure. You don't need to consciously remember hearing something before - mere familiarity breeds acceptance.

Research demonstrates this effect persists even when people are explicitly warned about false information and even across different languages and scripts. Your brain's processing fluency - how easily information flows through your neural networks - gets mistaken for truth. If something feels familiar, your mind assumes it must be accurate.

This mechanism explains why propaganda works through volume rather than sophistication. Repeat a lie often enough, and people's brains start processing it more smoothly. That smooth processing triggers a subtle feeling of rightness that gets interpreted as validity.

Professor David Gallo warns: "Do you think something sounds accurate because you read it a lot, or because you actually have multiple pieces of evidence for it?" Most people can't honestly answer that question about their own beliefs.

Confirmation bias isn't just preferring information that supports your views - it's an active distortion of how you process reality. Your brain doesn't neutrally evaluate evidence and then form conclusions. It forms conclusions first (often unconsciously) and then recruits evidence as post-hoc justification.

Motivated reasoning takes this further. When your identity or emotions are tied to a belief, your cognitive machinery shifts into defensive mode. The prefrontal cortex, instead of thinking critically, becomes an attorney building a case. You notice flaws in opposing arguments while overlooking glaring holes in your own position.

Neuroscientists recently discovered that political myside bias activates distinct brain regions. When evaluating statements aligned with their political identity, participants showed increased activity in areas associated with reward processing and decreased activity in regions linked to cognitive control.

The Conversation reports that cognitive biases and brain biology create a powerful barrier to changing minds. Facts alone can't penetrate because your brain treats belief challenges as threats, triggering defensive rather than analytical thinking.

Incompetence breeds confidence. The Dunning-Kruger effect describes how people with limited knowledge in a domain systematically overestimate their expertise. Ironically, the skills needed to be good at something are often the same skills needed to recognize you're not good at it.

This isn't about intelligence. It's about metacognition - your ability to accurately assess your own thinking. Someone with minimal understanding of climate science, economics, or virology lacks the mental framework to recognize the vast complexity they don't grasp. Psychology Today notes that this effect creates a double curse: not only do people fail to recognize their incompetence, they actively resist information that would illuminate their blind spots.

For false beliefs, this means the people most confident they're right are often the least equipped to evaluate the evidence. Their brains generate a feeling of understanding - smooth, coherent narratives that feel complete - while missing entire dimensions of the problem.

Humans evolved in small groups where belonging meant survival. Your brain still treats group membership as existential. When beliefs become markers of tribal identity, changing your mind feels like betrayal.

The in-group favoritism bias makes you view your group's members as more trustworthy, intelligent, and moral. Information from in-group sources gets processed with less skepticism. Psychology Today explores how in-group and out-group dynamics shape perception: your brain literally processes faces, voices, and arguments from perceived outsiders differently.

Echo chambers amplify this effect. Research on how echo chambers affect the brain shows that sustained exposure to homogeneous viewpoints strengthens existing neural pathways while weakening connections that would allow consideration of alternatives. Your brain physically rewires itself to reject dissenting information.

Social media algorithms exploit this biology ruthlessly. They've learned that keeping you inside your ideological bubble maximizes engagement. Every click, like, and share trains the algorithm to feed you more of what your reward system craves. You're not choosing your beliefs - you're being farmed for dopamine hits.
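The feedback loop described above can be illustrated with a toy model. The sketch below is a deliberately simplified, hypothetical recommender that uses epsilon-greedy selection, a standard textbook bandit technique chosen here for illustration. It is not how any real platform works; actual feed-ranking systems are far more complex and proprietary. The content categories and click probabilities in `CLICK_PROB` are invented assumptions. The point is only that a system optimizing clicks, with no explicit rule about agreement, still ends up filling the feed with whatever the user already likes.

```python
import random

# Hypothetical click-through probabilities for three content types.
# These numbers are illustrative assumptions, not measurements from any platform.
CLICK_PROB = {
    "agrees_with_user": 0.30,
    "neutral": 0.10,
    "challenges_user": 0.05,
}


def simulate_feed(steps: int = 10_000, epsilon: float = 0.1, seed: int = 0) -> dict:
    """Toy epsilon-greedy recommender that learns which content type gets clicked.

    Returns how often each content type was shown and the learned click estimates.
    """
    rng = random.Random(seed)
    shown = {k: 0 for k in CLICK_PROB}       # times each type was recommended
    estimate = {k: 0.0 for k in CLICK_PROB}  # running mean click rate per type

    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: occasionally show a random content type.
            choice = rng.choice(list(CLICK_PROB))
        else:
            # Exploit: show whatever has earned the most clicks so far.
            choice = max(estimate, key=estimate.get)

        # Simulate the user: click with the (hypothetical) probability for this type.
        clicked = 1.0 if rng.random() < CLICK_PROB[choice] else 0.0
        shown[choice] += 1
        # Incremental mean update: the only "training signal" is the click.
        estimate[choice] += (clicked - estimate[choice]) / shown[choice]

    return {"shown": shown, "estimate": estimate}


if __name__ == "__main__":
    result = simulate_feed()
    total = sum(result["shown"].values())
    for kind, count in result["shown"].items():
        print(f"{kind:>18}: {count / total:.1%} of feed, "
              f"estimated click rate {result['estimate'][kind]:.3f}")
```

Nothing in the code prefers agreeable content by design. The narrowing emerges purely from rewarding whatever gets clicked, which is the dynamic the paragraph above describes.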

Sometimes, confronting people with facts makes them believe falsehoods more strongly. The backfire effect occurs when corrections to misinformation actually reinforce the original false belief.

This may happen because your brain's threat detection system does not sharply distinguish between physical and ideological danger. When core beliefs are challenged, your amygdala can activate as if you're facing a predator. In this heightened state, the prefrontal cortex's analytical capabilities diminish. You're literally too threatened to think clearly.

Belief perseverance describes how beliefs persist even after the evidence supporting them has been completely discredited. Once your brain has constructed a narrative and reinforced it through the reward system, that neural pathway becomes the default. Contradictory information gets processed as anomalous noise rather than valid signal.

This creates a paradox: the more important an issue is, the harder it becomes to evaluate rationally. High stakes trigger emotional systems that override analytical thinking.

Understanding the neuroscience of belief doesn't make you immune, but it provides tools to recognize when your brain is misleading you.

Question your certainty. When you feel absolutely sure about something, especially regarding complex topics, that's a red flag. Genuine expertise comes with awareness of nuance and uncertainty. Practice intellectual humility by asking: "What would I need to see to change my mind on this?"

Seek out cognitive dissonance. Your brain avoids discomfort, but growth happens in that uncomfortable space. Deliberately expose yourself to well-reasoned arguments from people who disagree. Not inflammatory social media posts - substantive analysis from credible sources. Notice your emotional reaction and sit with it rather than immediately dismissing the challenge.

Distinguish familiarity from truth. Professor Gallo's question is crucial: Do you believe something because you've encountered it repeatedly, or because you've evaluated actual evidence? The illusory truth research shows that even awareness of this bias doesn't eliminate it, but metacognitive strategies can reduce its influence.

Examine your sources' incentives. Who benefits if you believe this? Follow the money, the attention, the power. Motivated reasoning isn't unique to "the other side" - your preferred sources have incentives too. Diversify your information diet beyond echo chambers.

Understand cognitive biases. You can't eliminate biases, since they're built into your neural architecture, but you can learn to spot them. Familiarize yourself with common ones: availability bias (overweighting recent or memorable examples), anchoring (overrelying on the first information encountered), and the sunk cost fallacy (sticking with something because of what you've already invested in it).

Practice epistemic humility. Build the metacognitive skill of recognizing the limits of your knowledge. For any belief you hold, try articulating: "Here's what I know. Here's what I think I know but could be wrong about. Here's what I don't know." This framework creates space for updating beliefs when new evidence emerges.

Slow down your thinking. The brain's fast, automatic System 1 thinking is where biases flourish. When encountering important claims, deliberately engage slower, more analytical System 2 thinking. Ask: What's the evidence? Who conducted the research? What are alternative explanations?

Check emotional reactions. If information makes you feel righteously angry, smugly superior, or tribally validated, your amygdala and reward system may be driving the bus. Strong emotional responses are often flags that identity-protective cognition has been activated. Pause before sharing or forming conclusions.

Cultivate curiosity over certainty. Reframe belief revision as intellectual growth rather than defeat. Scientists celebrate when their hypotheses are disproven - it means they learned something. Adopting a "scientist mindset" in everyday life means treating beliefs as hypotheses to test rather than fortresses to defend.

We're living through an unprecedented challenge to collective truth. Artificial intelligence can now generate convincing fake images, videos, and text. Deepfakes and synthetic media could make the illusory truth effect far more powerful. Your brain's already unreliable fact-checking machinery is about to face industrial-scale deception.

But understanding the neuroscience offers hope. Media literacy education that incorporates how brains process information can build cognitive immune systems. Teaching people about the reward circuitry of belief, the illusory truth effect, and motivated reasoning gives them tools to recognize manipulation.

Technology may also provide solutions: AI fact-checking systems that flag suspicious claims before they achieve repetition-driven credibility, browser extensions that diversify your information diet and highlight echo chamber effects, and neurofeedback tools that help people recognize when emotional systems have hijacked analytical thinking.

The most important shift may be cultural. Currently, changing your mind is often viewed as weakness - flip-flopping, being inconsistent, lacking conviction. We need to rebrand belief revision as intellectual courage. The willingness to update your views in light of new evidence should be celebrated as a mark of cognitive maturity, not derided as uncertainty.

The same neuroplasticity that allows false beliefs to take root enables you to rewire those pathways. Every time you consciously override a bias, question a certainty, or genuinely consider an opposing view, you're strengthening neural circuits for critical thinking.

This isn't about becoming a purely rational being - that's neither possible nor desirable. Emotions and intuitions serve valuable functions. But you can develop better metacognition, learning to recognize when your brain's shortcuts are leading you astray.

The battle for truth in the 21st century will be fought not just in laboratories and newsrooms, but in the three pounds of neural tissue between your ears. Your brain wants to mislead you - it's following ancient programming optimized for survival, not accuracy. Recognizing that is the first step toward reclaiming agency over what you believe and why.

The question isn't whether your brain will try to deceive you. It will. The question is whether you'll develop the awareness and tools to catch it in the act.

Ahuna Mons on dwarf planet Ceres is the solar system's only confirmed cryovolcano in the asteroid belt - a mountain made of ice and salt that erupted relatively recently. The discovery reveals that small worlds can retain subsurface oceans and geological activity far longer than expected, expanding the range of potentially habitable environments in our solar system.

Scientists discovered 24-hour protein rhythms in cells without DNA, revealing an ancient timekeeping mechanism that predates gene-based clocks by billions of years and exists across all life.

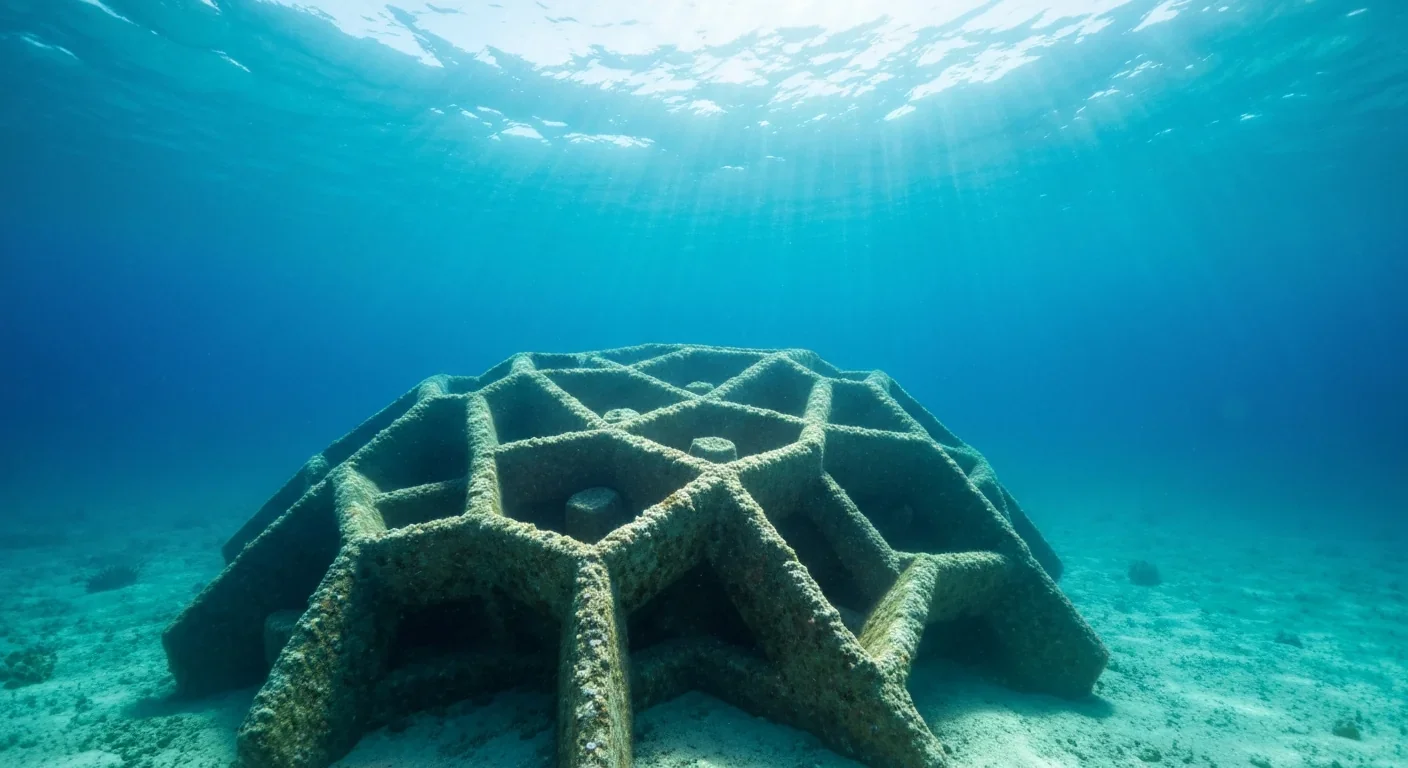

3D-printed coral reefs are being engineered with precise surface textures, material chemistry, and geometric complexity to optimize coral larvae settlement. While early projects show promise - with some designs achieving 80x higher settlement rates - scalability, cost, and the overriding challenge of climate change remain critical obstacles.

The minimal group paradigm shows humans discriminate based on meaningless group labels - like coin flips or shirt colors - revealing that tribalism is hardwired into our brains. Understanding this automatic bias is the first step toward managing it.

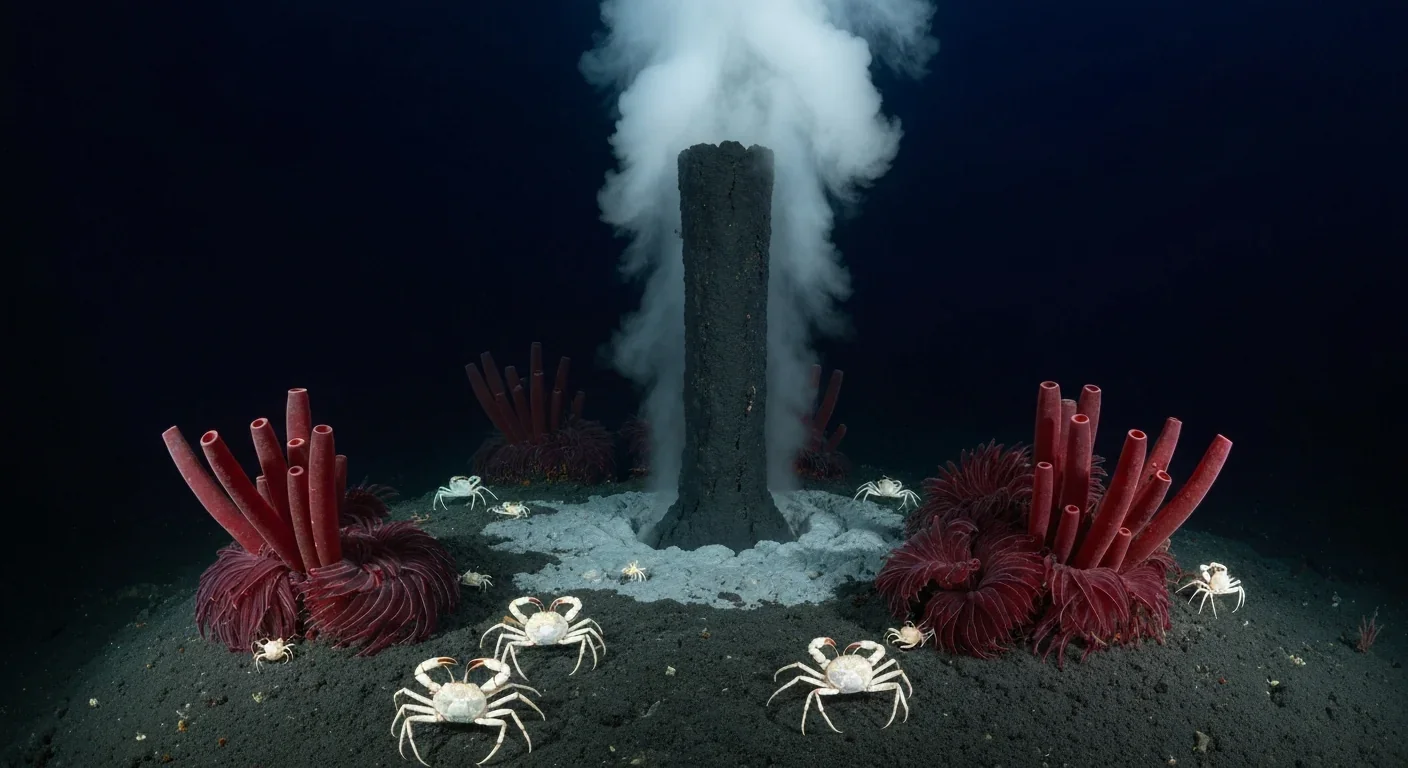

In 1977, scientists discovered thriving ecosystems around underwater volcanic vents powered by chemistry, not sunlight. These alien worlds host bizarre creatures and heat-loving microbes, revolutionizing our understanding of where life can exist on Earth and beyond.

Automated systems in housing - mortgage lending, tenant screening, appraisals, and insurance - systematically discriminate against communities of color by using proxy variables like ZIP codes and credit scores that encode historical racism. While the Fair Housing Act outlawed explicit redlining decades ago, machine learning models trained on biased data reproduce the same patterns at scale. Solutions exist - algorithmic auditing, fairness-aware design, regulatory reform - but require prioritizing equ...

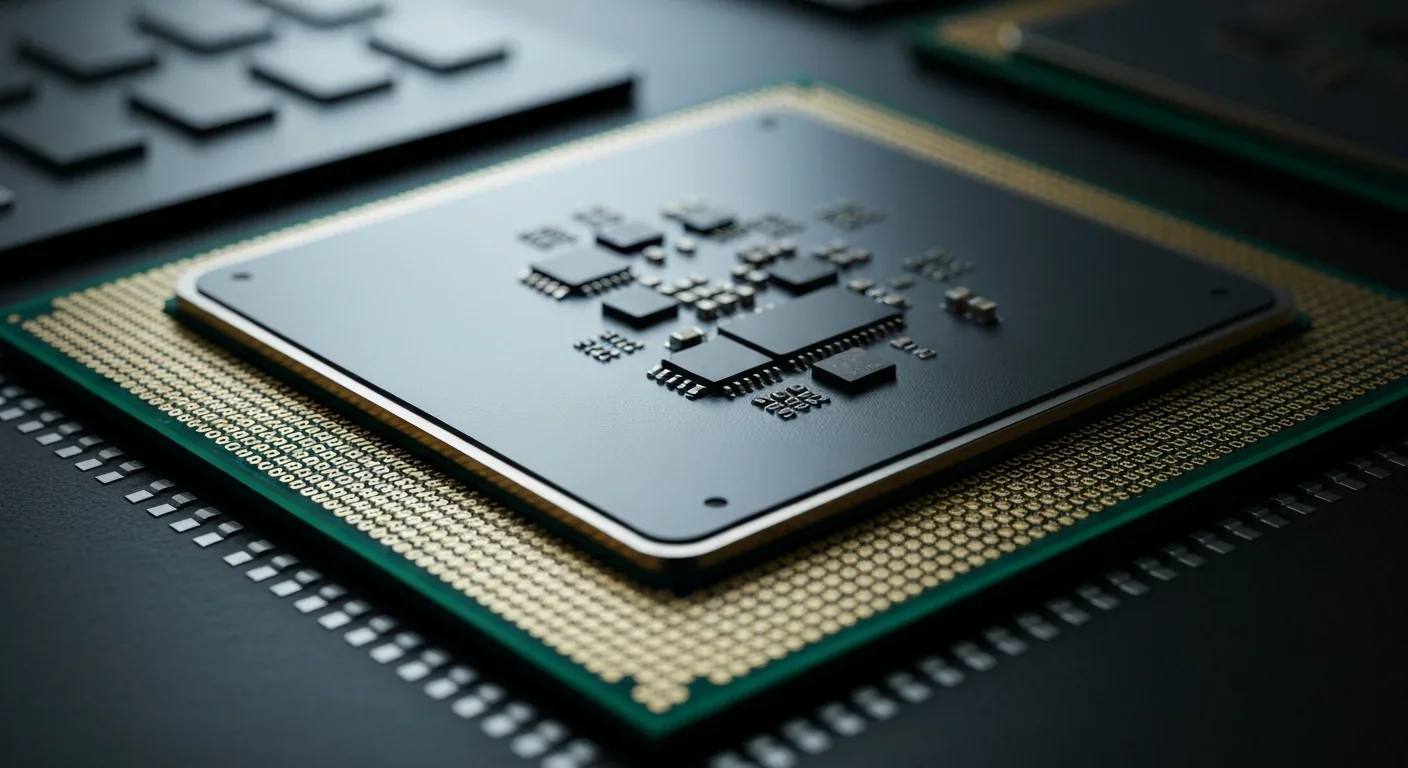

Cache coherence protocols like MESI and MOESI coordinate billions of operations per second to ensure data consistency across multi-core processors. Understanding these invisible hardware mechanisms helps developers write faster parallel code and avoid performance pitfalls.